Motion Magnificator C++ library

v3.2.2

Table of contents

- Overview

- Versions

- Library files

- Key features and capabilities

- Supported pixel formats

- Library principles

- MotionMagnificator class description

- MotionMagnificator class declaration

- getVersion method

- initVFilter method

- setParam method

- getParam method

- executeCommand method

- processFrame method

- setMask method

- encodeSetParamCommand method of VFilter class

- encodeCommand method of VFilter class

- decodeCommand method of VFilter class

- decodeAndExecuteCommand method

- getDevices method

- Data structures

- VFilterParams class description

- Build and connect to your project

- Example

- Benchmark

- Demo application

Overview

MotionMagnificator C++ library amplifies subtle motion and temporal variations in digital video. It allows camera system operators to detect moving objects that are not visible to the naked eye. Application areas: perimeter security and drone detection. The library is implemented in C++ (C++17 standard) and optionally uses OpenMP (version >= 2.0, if supported on the particular platform) and OpenCL (version >= 2.0, if supported on the particular platform). The library is suitable for various camera types (daylight, SWIR, MWIR and LWIR). Each instance of the MotionMagnificator C++ class processes one video stream frame by frame, independently of other instances. The library is intended only for stationary (or slowly moving) cameras, or for PTZ cameras observing a fixed sector. It works on different platforms and automatically detects whether OpenMP or OpenCL is installed and which devices are supported. If neither library is available, the algorithm runs on a single CPU core; if they are available, the user can choose the implementation type and the device or number of threads. The library depends on the open source VFilter library (provides the interface and defines data structures for various video filter implementations; source code included, Apache 2.0 license). Additionally, the demo application depends on the open source SimpleFileDialog library (provides a dialog to open files; source code included, Apache 2.0 license) and the OpenCV library (for capturing and displaying video; linked, Apache 2.0 license).

Versions

Table 1 - Library versions.

| Version | Release date | What’s new |

|---|---|---|

| 1.0.0 | 13.09.2021 | - First version. |

| 2.0.0 | 23.10.2023 | - New interface created. - New algorithm implemented. |

| 2.0.1 | 13.11.2023 | - Frame class updated. |

| 2.0.2 | 28.12.2023 | - Demo application updated. - Examples updated. - Benchmark added. |

| 2.1.0 | 23.02.2024 | - Interface updated. |

| 3.0.0 | 27.02.2024 | - Interface changed according to VFilter interface class. |

| 3.0.1 | 24.03.2024 | - VFilter class updated. - SimpleFileDialog class updated. - Documentation updated. |

| 3.1.0 | 15.04.2024 | - Performance improved. - Documentation updated. |

| 3.1.1 | 21.05.2024 | - Submodules updated. - Documentation updated. |

| 3.2.0 | 18.07.2024 | - Added support for OpenMP. - Added support for OpenCL. - CMake architecture updated. |

| 3.2.1 | 15.12.2024 | - Fixed OpenMP linkage on Linux. |

| 3.2.2 | 13.08.2025 | - Fixed a build bug on Windows. |

Library files

The library is supplied as source code only. The user is given a set of files in the form of a CMake project (repository). The repository structure is shown below:

CMakeLists.txt ----------------------- Main CMake file of the library.
3rdparty ----------------------------- Folder with third-party libraries.
    CMakeLists.txt ------------------- CMake file to include third-party libraries.
    VFilter -------------------------- Folder with VFilter library source code.
src ---------------------------------- Folder with library source code.
    CMakeLists.txt ------------------- CMake file of the library.
    MotionMagnificator.h ------------- Main library header file.
    MotionMagnificatorConfig.h.in ---- File for CMake to generate version header and config.
    MotionMagnificator.cpp ----------- C++ class source file.
    impl ----------------------------- Folder with implementation files.
        MotionMagnificatorImpl.cpp --- C++ implementation file with type detection.
        MotionMagnificatorImpl.h ----- Header file of the implementation.
demo --------------------------------- Folder for demo application files.
    CMakeLists.txt ------------------- CMake file for demo application.
    3rdparty ------------------------- Folder with third-party libraries for demo application.
        CMakeLists.txt --------------- CMake file to include third-party libraries.
        SimpleFileDialog ------------- Folder with SimpleFileDialog library source code.
    main.cpp ------------------------- Source C++ file of demo application.
example ------------------------------ Folder for simple example.
    CMakeLists.txt ------------------- CMake file of example.
    main.cpp ------------------------- Source C++ file of example.
benchmark ---------------------------- Folder with benchmark application.
    CMakeLists.txt ------------------- CMake file of benchmark application.
    main.cpp ------------------------- Source C++ file of benchmark application.

Key features and capabilities

Table 2 - Key features and capabilities.

| Parameter and feature | Description |

|---|---|

| Programming language | C++ (C++17 standard), optionally using OpenMP (version >= 2.0, if supported on the particular platform) and OpenCL (version >= 2.0, if supported on the particular platform). No third-party dependencies. |

| Supported OS | Compatible with any operating system that has a C++ compiler supporting the C++17 standard. |

| Movement type | The library can magnify any kind of movement, but it is best suited for tiny moving objects that are hard to spot with the naked eye, e.g. drones. |

| Supported pixel formats | GRAY, YUV24, YUYV, UYVY, NV12, NV21, YV12, YU12. The library uses pixel intensity for video processing. If the pixel format of the image doesn’t include an intensity channel, the image must be converted to a supported format before magnification. |

| Maximum and minimum video frame size | The minimum size of video frames to be processed is 32x32 pixels, and the maximum size is 8192x8192 pixels. The size of the video frames to be processed has a significant impact on the computation speed. |

| Calculation speed | The processing time per video frame depends on the computing platform used. The processing time per video frame can be estimated with the demo application. |

| Type of algorithm for movement magnification | A modified Lagrangian pyramid algorithm with temporal filtering and amplification is implemented. The amplification factor can be changed dynamically by the user to meet different requirements. |

| Working conditions | The algorithm is designed to work on fixed (or slowly moving) cameras under any background conditions. |

| Supported devices | Depending on the chosen TYPE parameter, the library can run in single-core CPU mode or multithreaded, either on the CPU (using OpenMP) or on a GPU (if the device is supported by the OpenCL library). |

Supported pixel formats

The Frame library, which is included in the MotionMagnificator library, contains the Fourcc enum that defines the supported pixel formats (Frame.h file). The MotionMagnificator library supports only formats with an intensity channel (GRAY, YUV24, YUYV, UYVY, NV12, NV21, YV12, YU12) and uses the intensity channel for video processing. Fourcc enum declaration:

enum class Fourcc

{

/// RGB 24bit pixel format.

RGB24 = MAKE_FOURCC_CODE('R', 'G', 'B', '3'),

/// BGR 24bit pixel format.

BGR24 = MAKE_FOURCC_CODE('B', 'G', 'R', '3'),

/// YUYV 16bits per pixel format.

YUYV = MAKE_FOURCC_CODE('Y', 'U', 'Y', 'V'),

/// UYVY 16bits per pixel format.

UYVY = MAKE_FOURCC_CODE('U', 'Y', 'V', 'Y'),

/// Grayscale 8bit.

GRAY = MAKE_FOURCC_CODE('G', 'R', 'A', 'Y'),

/// YUV 24bit per pixel format.

YUV24 = MAKE_FOURCC_CODE('Y', 'U', 'V', '3'),

/// NV12 pixel format.

NV12 = MAKE_FOURCC_CODE('N', 'V', '1', '2'),

/// NV21 pixel format.

NV21 = MAKE_FOURCC_CODE('N', 'V', '2', '1'),

/// YU12 (YUV420) - Planar pixel format.

YU12 = MAKE_FOURCC_CODE('Y', 'U', '1', '2'),

/// YV12 (YVU420) - Planar pixel format.

YV12 = MAKE_FOURCC_CODE('Y', 'V', '1', '2'),

/// JPEG compressed format.

JPEG = MAKE_FOURCC_CODE('J', 'P', 'E', 'G'),

/// H264 compressed format.

H264 = MAKE_FOURCC_CODE('H', '2', '6', '4'),

/// HEVC compressed format.

HEVC = MAKE_FOURCC_CODE('H', 'E', 'V', 'C')

};
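A pre-check before calling processFrame(…) can combine the format restriction above with the 32x32 to 8192x8192 frame size limits from Table 2. The sketch below is self-contained and hypothetical: SKETCH_FOURCC, SketchFourcc, hasIntensityChannel and canBeMagnified are illustrative names, and the byte packing in SKETCH_FOURCC follows the V4L2 convention (first character in the lowest byte), which may differ from the real MAKE_FOURCC_CODE macro in Frame.h.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical packing macro for this sketch only, modeled on V4L2's v4l2_fourcc()
// (first character in the lowest byte). The real MAKE_FOURCC_CODE may differ.
#define SKETCH_FOURCC(a, b, c, d)                                   \
    (static_cast<uint32_t>(a) | (static_cast<uint32_t>(b) << 8) |   \
     (static_cast<uint32_t>(c) << 16) | (static_cast<uint32_t>(d) << 24))

// Local mirror of part of the Fourcc enum, used only by this sketch.
enum class SketchFourcc : uint32_t
{
    BGR24 = SKETCH_FOURCC('B', 'G', 'R', '3'),
    RGB24 = SKETCH_FOURCC('R', 'G', 'B', '3'),
    YUYV  = SKETCH_FOURCC('Y', 'U', 'Y', 'V'),
    UYVY  = SKETCH_FOURCC('U', 'Y', 'V', 'Y'),
    GRAY  = SKETCH_FOURCC('G', 'R', 'A', 'Y'),
    YUV24 = SKETCH_FOURCC('Y', 'U', 'V', '3'),
    NV12  = SKETCH_FOURCC('N', 'V', '1', '2'),
    NV21  = SKETCH_FOURCC('N', 'V', '2', '1'),
    YU12  = SKETCH_FOURCC('Y', 'U', '1', '2'),
    YV12  = SKETCH_FOURCC('Y', 'V', '1', '2'),
    JPEG  = SKETCH_FOURCC('J', 'P', 'E', 'G')
};

// True for the formats that carry an intensity (Y) channel.
bool hasIntensityChannel(SketchFourcc fourcc)
{
    switch (fourcc)
    {
    case SketchFourcc::GRAY:
    case SketchFourcc::YUV24:
    case SketchFourcc::YUYV:
    case SketchFourcc::UYVY:
    case SketchFourcc::NV12:
    case SketchFourcc::NV21:
    case SketchFourcc::YV12:
    case SketchFourcc::YU12:
        return true;
    default:
        return false;
    }
}

// Format check plus the documented frame size limits (32x32 .. 8192x8192).
bool canBeMagnified(SketchFourcc fourcc, int width, int height)
{
    const bool sizeOk = width >= 32 && height >= 32 &&
                        width <= 8192 && height <= 8192;
    return sizeOk && hasIntensityChannel(fourcc);
}
```

For example, canBeMagnified(SketchFourcc::BGR24, 1920, 1080) returns false, signalling that a BGR frame has to be converted to one of the supported formats first.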

Table 3 - Bytes layout of supported RAW pixel formats. Example of 4x4 pixels image.

Library principles

The video motion magnification algorithm is implemented with multi-hypothesis support, incorporating temporal filtering and signal amplification. The algorithm consists of the following sequential steps:

- Acquiring the source video frame and retrieving its intensity channel if needed.

- Calculating the differences of pixel intensity values.

- Filtering the signal and applying amplification.

- Creating the result image by writing the processed values to the intensity channel and merging it with the incoming image.
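The four steps can be illustrated with a deliberately simplified sketch that works on one 8-bit intensity plane. This is not the library's algorithm: the temporal filter here is an assumed per-pixel exponential running average (a basic Eulerian-style scheme), and SimpleIntensityMagnifier, alpha and smoothing are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative magnifier for one 8-bit intensity plane (not the library's algorithm).
class SimpleIntensityMagnifier
{
public:
    // alpha: amplification factor for the temporal variation.
    // smoothing: weight of the newest frame in the running average, in (0, 1].
    SimpleIntensityMagnifier(float alpha, float smoothing)
        : m_alpha(alpha), m_smoothing(smoothing)
    {
        assert(smoothing > 0.0f && smoothing <= 1.0f);
    }

    // Step 1 is assumed done by the caller: 'intensity' is the Y plane of the frame.
    // The plane is modified in place.
    void process(std::vector<uint8_t>& intensity)
    {
        if (m_average.size() != intensity.size())
        {
            // First frame or size change: initialize the temporal reference only.
            m_average.assign(intensity.begin(), intensity.end());
            return;
        }
        for (std::size_t i = 0; i < intensity.size(); ++i)
        {
            const float current = intensity[i];
            // Step 2: difference between the pixel and its temporal reference.
            const float diff = current - m_average[i];
            // Step 3: update the low-pass reference; 'diff' acts as a high-pass signal.
            m_average[i] += m_smoothing * diff;
            // Steps 3-4: amplify the variation and write it back, clamped to 8 bits.
            const float out = std::clamp(current + m_alpha * diff, 0.0f, 255.0f);
            intensity[i] = static_cast<uint8_t>(out + 0.5f);
        }
    }

private:
    float m_alpha;
    float m_smoothing;
    std::vector<float> m_average;
};
```

Each call corresponds to one frame: the running average plays the role of the temporally filtered reference, the difference from it is the signal that gets amplified, and the result is written back into the intensity plane, which in a YUV or NV12 frame would then be combined with the untouched chroma data.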

The library is available as source code only. To use it, developers must include the library files in their project. The usage sequence for the library is as follows:

- Include the library files in the project.

- Create an instance of the MotionMagnificator C++ class. To process several cameras in parallel, create a separate MotionMagnificator instance for each camera.

- If necessary, modify the default library parameters using the setParam(…) method.

- Create a Frame class object for the input frame.

- Call the processFrame(…) method for each frame to magnify motion in the video stream.

MotionMagnificator class description

MotionMagnificator class declaration

MotionMagnificator.h file contains MotionMagnificator class declaration:

namespace cr

{

namespace video

{

/// Motion magnificator class.

class MotionMagnificator : public VFilter

{

public:

/// Class constructor.

MotionMagnificator();

/// Class destructor.

~MotionMagnificator();

/// Get string of current library version.

static std::string getVersion();

/// Initialize motion magnificator.

bool initVFilter(VFilterParams& params) override;

/// Set motion magnificator parameter.

bool setParam(VFilterParam id, float value) override;

/// Get motion magnificator parameter value.

float getParam(VFilterParam id) override;

/// Get all motion magnificator parameters.

void getParams(VFilterParams& params) override;

/// Execute action command.

bool executeCommand(VFilterCommand id) override;

/// Process frame.

bool processFrame(cr::video::Frame& frame) override;

/// Set motion magnification mask.

bool setMask(cr::video::Frame mask) override;

/// Decode and execute command.

bool decodeAndExecuteCommand(uint8_t* data, int size) override;

/// Get list of available GPU devices.

static std::vector<std::vector<std::string>> getDevices();

};

}

}

getVersion method

The getVersion() method returns a string with the current version of the MotionMagnificator class. Method declaration:

static std::string getVersion();

The method can be used without a MotionMagnificator class instance. Example:

std::cout << "MotionMagnificator v: " << MotionMagnificator::getVersion();

Console output:

MotionMagnificator v: 3.2.2

initVFilter method

The initVFilter(…) method initializes the MotionMagnificator object with a VFilterParams object that contains all video filter parameters. Method declaration:

bool initVFilter(VFilterParams& params) override;

| Parameter | Description |

|---|---|

| params | VFilterParams class object. |

Returns: TRUE if the video filter was initialized or FALSE if not.

setParam method

The setParam(…) method sets a new value of a MotionMagnificator parameter. setParam(…) is thread-safe: it can be safely called from any thread. Method declaration:

bool setParam(VFilterParam id, float value) override;

| Parameter | Description |

|---|---|

| id | Parameter ID according to VFilterParam enum. |

| value | Parameter value. Value depends on parameter ID. |

Returns: TRUE if the parameter was set or FALSE if not.

getParam method

The getParam(…) method returns the value of a motion magnificator parameter. getParam(…) is thread-safe: it can be safely called from any thread. Method declaration:

float getParam(VFilterParam id) override;

| Parameter | Description |

|---|---|

| id | Parameter ID according to VFilterParam enum. |

Returns: parameter value or -1 if the parameter is not supported.

executeCommand method

The executeCommand(…) method executes a motion magnificator action command. executeCommand(…) is thread-safe: it can be safely called from any thread. Method declaration:

bool executeCommand(VFilterCommand id) override;

| Parameter | Description |

|---|---|

| id | Command ID according to VFilterCommand enum. |

Returns: TRUE if the command was executed or FALSE if not.

processFrame method

The processFrame(…) method performs the magnification algorithm. processFrame(…) is thread-safe: it can be safely called from any thread. Method declaration:

bool processFrame(cr::video::Frame& frame) override;

| Parameter | Description |

|---|---|

| frame | Video Frame object for processing. The motion magnificator processes only GRAY, YUV24, YUYV, UYVY, NV12, NV21, YV12 and YU12 formats, using pixel intensity. If the pixel format of the image doesn’t include an intensity channel, the image must be converted to a supported format before magnification. |

Returns: TRUE if the video frame was processed or FALSE if not. If the motion magnificator is disabled, the method returns TRUE.

setMask method

The setMask(…) method sets the magnification mask. The user can disable magnification in any area of the video frame. To do this, the user creates an image of any size and configuration in GRAY (preferred), NV12, NV21, YV12 or YU12 pixel format. Mask pixels with value 0 prohibit motion magnification at the corresponding location of video frames; any non-zero mask pixel value allows magnification at the corresponding location. The library uses the mask to compute a binary magnification mask. The method can be called either before or during video frame processing. Method declaration:

bool setMask(cr::video::Frame mask) override;

| Parameter | Description |

|---|---|

| mask | Image of the magnification mask (Frame object). Must have GRAY (preferred), NV12, NV21, YV12 or YU12 pixel format. The mask image can have any size and configuration. If the size of the mask image differs from the size of processed frames, the library scales the mask for processing. |

Returns: TRUE if the mask was accepted or FALSE if not (invalid pixel format or empty mask).
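The mask rules above (zero blocks magnification, any non-zero value allows it, and a mask of a different size is rescaled to the frame size) can be sketched for a GRAY mask as follows. The library does not document its scaling method, so nearest-neighbour scaling is an assumption here, and buildBinaryMask and applyMask are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Builds a per-pixel binary mask (1 = magnify, 0 = keep original) for a
// frameW x frameH frame from a GRAY mask of maskW x maskH pixels,
// using nearest-neighbour scaling (an assumption of this sketch).
std::vector<uint8_t> buildBinaryMask(const std::vector<uint8_t>& mask,
                                     int maskW, int maskH, int frameW, int frameH)
{
    assert(mask.size() == static_cast<std::size_t>(maskW) * maskH);
    std::vector<uint8_t> binary(static_cast<std::size_t>(frameW) * frameH, 0);
    for (int y = 0; y < frameH; ++y)
    {
        const int my = y * maskH / frameH;       // Source mask row for this frame row.
        for (int x = 0; x < frameW; ++x)
        {
            const int mx = x * maskW / frameW;   // Source mask column for this frame column.
            const uint8_t value = mask[static_cast<std::size_t>(my) * maskW + mx];
            binary[static_cast<std::size_t>(y) * frameW + x] = (value != 0) ? 1 : 0;
        }
    }
    return binary;
}

// Restores original pixels wherever magnification is prohibited.
void applyMask(const std::vector<uint8_t>& binary,
               const std::vector<uint8_t>& original,
               std::vector<uint8_t>& magnified)
{
    assert(binary.size() == original.size() && original.size() == magnified.size());
    for (std::size_t i = 0; i < magnified.size(); ++i)
        if (binary[i] == 0)
            magnified[i] = original[i];
}
```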

encodeSetParamCommand method of VFilter class

The encodeSetParamCommand(…) static method encodes a command to change any VFilter parameter value remotely. To control a video filter remotely, the developer has to design their own protocol, encode the command according to it and deliver it over the communication channel. To simplify this, the VFilter class contains static methods for encoding control commands. The VFilter class provides two types of commands: a parameter change command (SET_PARAM) and an action command (COMMAND). encodeSetParamCommand(…) is designed to encode the SET_PARAM command. Method declaration:

static void encodeSetParamCommand(uint8_t* data, int& size, VFilterParam id, float value);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer for encoded command. Must have size >= 11. |

| size | Size of encoded data. Size will be 11 bytes. |

| id | Parameter ID according to VFilterParam enum. |

| value | Parameter value. |

encodeSetParamCommand(…) is static and can be used without a VFilter class instance. This method is used on the client side (control system). Command encoding example:

// Buffer for encoded data.

uint8_t data[11];

// Size of encoded data.

int size = 0;

// Random parameter value.

float outValue = static_cast<float>(rand() % 20);

// Encode command.

VFilter::encodeSetParamCommand(data, size, VFilterParam::LEVEL, outValue);
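Because the developer designs the transport protocol, one simple option for a byte-stream channel (for example TCP) is to prefix each encoded command with its length so that the receiver can split the stream back into whole commands. The sketch below assumes such a 1-byte length prefix; frameCommand and CommandDeframer are hypothetical names, not part of VFilter.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Wraps an encoded command as [1-byte length][command bytes].
std::vector<uint8_t> frameCommand(const uint8_t* data, int size)
{
    assert(data != nullptr && size >= 0 && size <= 255);
    std::vector<uint8_t> framed;
    framed.reserve(static_cast<std::size_t>(size) + 1);
    framed.push_back(static_cast<uint8_t>(size));   // 1-byte length prefix.
    framed.insert(framed.end(), data, data + size); // Encoded command bytes.
    return framed;
}

// Accumulates received bytes and extracts complete commands.
class CommandDeframer
{
public:
    // Appends received bytes (may hold partial or several framed commands).
    void push(const uint8_t* bytes, std::size_t count)
    {
        m_buffer.insert(m_buffer.end(), bytes, bytes + count);
    }

    // Moves the next complete command into 'payload'; returns false if none is ready.
    bool next(std::vector<uint8_t>& payload)
    {
        if (m_buffer.empty())
            return false;
        const std::size_t length = m_buffer[0];
        if (m_buffer.size() < length + 1)
            return false;                        // Command not fully received yet.
        const auto begin = m_buffer.begin() + 1;
        const auto end = begin + static_cast<std::ptrdiff_t>(length);
        payload.assign(begin, end);
        m_buffer.erase(m_buffer.begin(), end);   // Drop the consumed frame.
        return true;
    }

private:
    std::vector<uint8_t> m_buffer;
};
```

On the edge device, each extracted payload would then be passed to decodeAndExecuteCommand(payload.data(), static_cast<int>(payload.size())). The length prefix keeps the receiver independent of the command type, since the 11-byte SET_PARAM and the 7-byte COMMAND buffers are framed the same way.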

encodeCommand method of VFilter class

The encodeCommand(…) static method encodes an action command for VFilter remote control. To control a video filter remotely, the developer has to design their own protocol, encode the command according to it and deliver it over the communication channel. To simplify this, the VFilter class contains static methods for encoding control commands. The VFilter class provides two types of commands: a parameter change command (SET_PARAM) and an action command (COMMAND). encodeCommand(…) is designed to encode the COMMAND (action command). Method declaration:

static void encodeCommand(uint8_t* data, int& size, VFilterCommand id);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer for encoded command. Must have size >= 7. |

| size | Size of encoded data. Size will be 7 bytes. |

| id | Command ID according to VFilterCommand enum. |

encodeCommand(…) is static and can be used without a VFilter class instance. This method is used on the client side (control system). Command encoding example:

// Buffer for encoded data.

uint8_t data[7];

// Size of encoded data.

int size = 0;

// Encode command.

VFilter::encodeCommand(data, size, VFilterCommand::RESET);

decodeCommand method of VFilter class

The decodeCommand(…) static method decodes a command on the video filter side (edge device). Method declaration:

static int decodeCommand(uint8_t* data, int size, VFilterParam& paramId, VFilterCommand& commandId, float& value);

| Parameter | Description |

|---|---|

| data | Pointer to input command. |

| size | Size of command. Must be 11 bytes for SET_PARAM or 7 bytes for COMMAND (action command). |

| paramId | VFilter parameter ID according to the VFilterParam enum. After decoding a SET_PARAM command, the method writes the parameter ID here. |

| commandId | VFilter command ID according to the VFilterCommand enum. After decoding a COMMAND, the method writes the command ID here. |

| value | VFilter parameter value (set after decoding a SET_PARAM command). |

Returns: 0 in case of decoding a COMMAND (action command), 1 in case of decoding a SET_PARAM command, or -1 in case of errors.

decodeAndExecuteCommand method

The decodeAndExecuteCommand(…) method decodes and executes, on the video filter side, a command encoded by the encodeSetParamCommand(…) or encodeCommand(…) method. decodeAndExecuteCommand(…) is thread-safe: it can be safely called from any thread. Method declaration:

bool decodeAndExecuteCommand(uint8_t* data, int size) override;

| Parameter | Description |

|---|---|

| data | Pointer to input command. |

| size | Size of command. Must be 11 bytes for SET_PARAM or 7 bytes for COMMAND. |

Returns: TRUE if the command was decoded (SET_PARAM or COMMAND) and executed, or FALSE if not.

getDevices method

The getDevices() static method returns the devices available through the OpenCL library. If OpenCL is installed, it returns the list of platforms and, for each platform, the names of its devices. Method declaration:

static std::vector<std::vector<std::string>> getDevices();

Returns: vector of vectors of strings with device names, where the index of each inner vector is the platform number and its strings are the names of the devices on that platform. The following code prints information about the platforms:

std::vector<std::vector<std::string>> devices = MotionMagnificator::getDevices();

for (size_t i = 0; i < devices.size(); i++)

{

std::cout << "Platform " << i << ":" << std::endl;

for (size_t j = 0; j < devices[i].size(); j++)

{

std::cout << " Device nr " << j << ": " << devices[i][j] << std::endl;

}

}

Example of console output:

OpenCL was found. Available GPU devices for OpenCL implementation:

Platform 0:

Device nr 0: NVIDIA CUDA / NVIDIA GeForce RTX 4050 Laptop GPU

Platform 1:

Device nr 0: Intel(R) OpenCL Graphics / Intel(R) Iris(R) Xe Graphics

Platform 2:

Device nr 0: OpenCLOn12 / Intel(R) Iris(R) Xe Graphics

Device nr 1: OpenCLOn12 / NVIDIA GeForce RTX 4050 Laptop GPU

Device nr 2: OpenCLOn12 / Microsoft Basic Render Driver
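Because the index of each inner vector is the platform number and the index inside it is the device number, a device can be picked by name and its indices reused for the CUSTOM_1 and CUSTOM_2 parameters. findDevice below is a hypothetical helper, not part of the library; it only assumes the vector-of-vectors layout returned by getDevices().

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Finds the first device whose name contains 'pattern'.
// On success writes the platform and device indices and returns true.
bool findDevice(const std::vector<std::vector<std::string>>& devices,
                const std::string& pattern, int& platform, int& device)
{
    for (std::size_t p = 0; p < devices.size(); ++p)
    {
        for (std::size_t d = 0; d < devices[p].size(); ++d)
        {
            if (devices[p][d].find(pattern) != std::string::npos)
            {
                platform = static_cast<int>(p);
                device = static_cast<int>(d);
                return true;
            }
        }
    }
    return false;
}
```

With TYPE set to 2 (OpenCL), the indices found could then be applied with setParam(VFilterParam::CUSTOM_1, platform) and setParam(VFilterParam::CUSTOM_2, device). With the console output above, searching for "Microsoft" would select platform 2, device 2.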

Data structures

VFilterCommand enum

Enum declaration:

enum class VFilterCommand

{

/// Reset video filter algorithm.

RESET = 1,

/// Enable filter.

ON,

/// Disable filter.

OFF

};

Table 4 - Action commands description.

| Command | Description |

|---|---|

| RESET | Reset motion magnificator. |

| ON | Enable motion magnificator. |

| OFF | Disable motion magnificator. When the OFF command is executed, the library first resets the algorithm. |

VFilterParam enum

Enum declaration:

enum class VFilterParam

{

/// Filter mode: 0 - off, 1 - on. Depends on implementation.

MODE = 1,

/// Enhancement level for particular filter, as a percentage from

/// 0% to 100%. May have another meaning depends on implementation.

LEVEL,

/// Processing time in microseconds. Read only parameter.

PROCESSING_TIME_MCSEC,

/// Type of the filter. Depends on the implementation.

TYPE,

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

CUSTOM_1,

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

CUSTOM_2,

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

CUSTOM_3

};

Table 3 - Params description.

| Parameter | Access | Description |

|---|---|---|

| MODE | read / write | Mode: 0 - off, 1 - on. If the magnificator is not activated, frames are not processed, only forwarded. |

| LEVEL | read / write | Amplification factor. It adjusts the intensity of motion enhancement: higher values emphasize motion changes, lower values keep the original motion characteristics. Supported values are from 0 to 100%. |

| PROCESSING_TIME_MCSEC | read only | Processing time of the last frame in microseconds. Used to check the performance of MotionMagnificator. |

| TYPE | read / write | Type of the algorithm implementation used for magnification: - 0 - raw implementation, meant for low-power CPU platforms; runs calculations in a single thread. - 1 - OpenMP, multithreaded implementation running on the CPU only (available only if OpenMP was detected at the library compilation step). The number of threads can be set by the CUSTOM_3 parameter. - 2 - OpenCL, implementation using the OpenCL library (available only if OpenCL was detected at the library compilation step). The platform number (CUSTOM_1), device number (CUSTOM_2) and number of threads (CUSTOM_3) can be changed. |

| CUSTOM_1 | read / write | Number of the platform to use for computing. Note: supported only when the TYPE parameter is set to 2 (OpenCL). |

| CUSTOM_2 | read / write | Number of the device to use for computing. Note: supported only when the TYPE parameter is set to 2 (OpenCL). |

| CUSTOM_3 | read / write | Number of threads for computing (must be >= 0). Value 0 means auto detection. Supported values can differ between platforms and devices; if an invalid value is set, the library automatically chooses the closest valid value. Note: supported only when the TYPE parameter is set to 1 (OpenMP) or 2 (OpenCL). For OpenMP, the library sets the given number of threads. For OpenCL, the library checks whether the value is valid and sets the closest valid value. |
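A control application may want to pre-validate a CUSTOM_3 value before sending it. The sketch below mirrors the rules in the table (the value must be >= 0, 0 means auto detection, out-of-range values snap to the closest valid one) under the assumption that valid thread counts are 1..maxThreads; the library's own validation may differ, and normalizeThreadCount is a hypothetical helper.

```cpp
#include <cassert>
#include <cmath>

// Client-side normalization of a CUSTOM_3 value (assumed valid range 1..maxThreads).
// Returns 0 for auto detection, -1 for invalid (negative) input,
// otherwise the closest valid thread count.
int normalizeThreadCount(float requested, int maxThreads)
{
    assert(maxThreads >= 1);
    if (requested < 0.0f)
        return -1;                        // CUSTOM_3 must be >= 0.
    const long rounded = std::lround(requested);
    if (rounded == 0)
        return 0;                         // 0 keeps auto detection.
    if (rounded > maxThreads)
        return maxThreads;                // Closest valid value from above.
    return static_cast<int>(rounded);
}
```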

VFilterParams class description

VFilterParams class declaration

The VFilterParams class provides the video filter parameters structure. It also allows writing/reading params to/from JSON files (JSON_READABLE macro) and provides methods to encode and decode params. The VFilterParams interface class is declared in the VFilter.h file. Class declaration:

namespace cr

{

namespace video

{

/// VFilter parameters class.

class VFilterParams

{

public:

/// Filter mode: 0 - off, 1 - on. Depends on implementation.

int mode{ 1 };

/// Enhancement level for particular filter, as a percentage from

/// 0% to 100%. May have another meaning depends on implementation.

float level{ 0.0f };

/// Processing time in microseconds. Read only parameter.

int processingTimeMcSec{ 0 };

/// Type of the filter. Depends on the implementation.

int type{ 0 };

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

float custom1{ 0.0f };

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

float custom2{ 0.0f };

/// VFilter custom parameter. Custom parameters used when particular image

/// filter has specific unusual parameter.

float custom3{ 0.0f };

/// Macro from ConfigReader to make params readable / writable from JSON.

JSON_READABLE(VFilterParams, mode, level, type, custom1, custom2, custom3)

/// operator =

VFilterParams& operator= (const VFilterParams& src);

/// Encode (serialize) params.

bool encode(uint8_t* data, int bufferSize, int& size,

VFilterParamsMask* mask = nullptr);

/// Decode (deserialize) params.

bool decode(uint8_t* data, int dataSize);

};

}

}

Table 5 - VFilterParams class fields description (related to the VFilterParam enum description).

| Field | Type | Description |

|---|---|---|

| mode | int | Mode: 0 - off, 1 - on. If the magnificator is not activated, frames are not processed, only forwarded. |

| level | float | Amplification factor. It adjusts the intensity of motion enhancement: higher values emphasize motion changes, lower values keep the original motion characteristics. Supported values are from 0 to 100%. |

| processingTimeMcSec | int | Processing time of the last frame in microseconds. Read only parameter. Used to check the performance of MotionMagnificator. |

| type | int | Type of the algorithm implementation used for magnification: - 0 - raw implementation, meant for low-power CPU platforms; runs calculations in a single thread. - 1 - OpenMP, multithreaded implementation running on the CPU only (available only if OpenMP was detected at the library compilation step). The number of threads can be set by the custom3 field. - 2 - OpenCL, implementation using the OpenCL library (available only if OpenCL was detected at the library compilation step). The platform number (custom1), device number (custom2) and number of threads (custom3) can be changed. |

| custom1 | float | Number of the platform to use for computing. Note: supported only when type is set to 2 (OpenCL). |

| custom2 | float | Number of the device to use for computing. Note: supported only when type is set to 2 (OpenCL). |

| custom3 | float | Number of threads for computing (must be >= 0). Value 0 means auto detection. Supported values can differ between platforms and devices; if an invalid value is set, the library automatically chooses the closest valid value. Note: supported only when type is set to 1 (OpenMP) or 2 (OpenCL). For OpenMP, the library sets the given number of threads. For OpenCL, the library checks whether the value is valid and sets the closest valid value. |

Note: the VFilterParams class fields listed in Table 5 must reflect the params set/get by the setParam(…) and getParam(…) methods.

Serialize VFilter params

The VFilterParams class provides the encode(…) method to serialize VFilter params. Serialization of VFilterParams is necessary when video filter parameters have to be sent via communication channels. The method can exclude particular parameters from serialization. To do this, it inserts a binary mask (1 byte) in which each bit represents a particular parameter; the decode(…) method recognizes this mask. Method declaration:

bool encode(uint8_t* data, int bufferSize, int& size, VFilterParamsMask* mask = nullptr);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer. Buffer size must be >= 32 bytes. |

| bufferSize | Data buffer size. Buffer size must be >= 32 bytes. |

| size | Size of encoded data. |

| mask | Parameters mask: pointer to a VFilterParamsMask structure. VFilterParamsMask (declared in the VFilter.h file) determines a flag for each field (parameter) declared in the VFilterParams class. To exclude parameters from serialization, pass a pointer to the mask and set the flags of the excluded parameters to false (all flags are true by default). |

Returns: TRUE if params encoded (serialized) or FALSE if not (buffer size < 32).

VFilterParamsMask structure declaration:

struct VFilterParamsMask

{

bool mode{ true };

bool level{ true };

bool processingTimeMcSec{ true };

bool type{ true };

bool custom1{ true };

bool custom2{ true };

bool custom3{ true };

};
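The encode(…) description states that the mask is stored as one byte with one bit per parameter, but the bit order is not documented. The sketch below assumes the bits follow the VFilterParamsMask field order (bit 0 = mode through bit 6 = custom3); ParamsMaskSketch, packMask and unpackMask are hypothetical names used only to illustrate the idea.

```cpp
#include <cassert>
#include <cstdint>

// Local copy of the VFilterParamsMask layout shown above (same field order).
struct ParamsMaskSketch
{
    bool mode{ true };
    bool level{ true };
    bool processingTimeMcSec{ true };
    bool type{ true };
    bool custom1{ true };
    bool custom2{ true };
    bool custom3{ true };
};

// Packs the flags into one byte; assumed order: bit 0 = mode ... bit 6 = custom3.
uint8_t packMask(const ParamsMaskSketch& m)
{
    const bool flags[7] = { m.mode, m.level, m.processingTimeMcSec, m.type,
                            m.custom1, m.custom2, m.custom3 };
    uint8_t byte = 0;
    for (int bit = 0; bit < 7; ++bit)
        if (flags[bit])
            byte = static_cast<uint8_t>(byte | (1u << bit));
    return byte;
}

// Restores the flags from a packed byte using the same assumed bit order.
ParamsMaskSketch unpackMask(uint8_t byte)
{
    ParamsMaskSketch m;
    m.mode = (byte >> 0) & 1u;
    m.level = (byte >> 1) & 1u;
    m.processingTimeMcSec = (byte >> 2) & 1u;
    m.type = (byte >> 3) & 1u;
    m.custom1 = (byte >> 4) & 1u;
    m.custom2 = (byte >> 5) & 1u;
    m.custom3 = (byte >> 6) & 1u;
    return m;
}
```

In such a scheme, the receiver reads the mask byte first and then decodes only the fields whose bits are set, which is how a serialized buffer can stay valid when some parameters are excluded.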

Example without parameters mask:

// Prepare parameters.

cr::video::VFilterParams params;

params.level = 80.0f;

// Encode (serialize) params.

int bufferSize = 128;

uint8_t buffer[128];

int size = 0;

params.encode(buffer, bufferSize, size);

Example with parameters mask:

// Prepare parameters.

cr::video::VFilterParams params;

params.level = 80.0f;

// Prepare mask.

cr::video::VFilterParamsMask mask;

// Exclude level.

mask.level = false;

// Encode (serialize) params.

int bufferSize = 128;

uint8_t buffer[128];

int size = 0;

params.encode(buffer, bufferSize, size, &mask);

Deserialize VFilter params

The VFilterParams class provides the decode(…) method to deserialize params. Deserialization of VFilterParams is necessary when params have to be received via communication channels. The method automatically recognizes which parameters were serialized by the encode(…) method. Method declaration:

bool decode(uint8_t* data, int dataSize);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer with serialized params. |

| dataSize | Size of serialized data. |

Returns: TRUE if params decoded (deserialized) or FALSE if not.

Example:

// Prepare parameters.

cr::video::VFilterParams params1;

params1.level = 80;

// Encode (serialize) params.

int bufferSize = 128;

uint8_t buffer[128];

int size = 0;

params1.encode(buffer, bufferSize, size);

// Decode (deserialize) params.

cr::video::VFilterParams params2;

params2.decode(buffer, size);

Read params from JSON file and write to JSON file

VFilter depends on the open source ConfigReader library, which provides methods to read params from a JSON file and to write params to a JSON file. Example of writing params to and reading params from a JSON file:

// Prepare random params.

cr::video::VFilterParams params1;

params1.mode = 1;

params1.level = 10.1f;

params1.processingTimeMcSec = 10;

params1.type = 2;

params1.custom1 = 22.3f;

params1.custom2 = 23.4f;

params1.custom3 = 24.5f;

// Save to JSON.

cr::utils::ConfigReader configReader1;

if (!configReader1.set(params1, "Params"))

std::cout << "Can't set params" << std::endl;

if (!configReader1.writeToFile("VFilterParams.json"))

std::cout << "Can't write to file" << std::endl;

// Read params from file.

cr::utils::ConfigReader configReader2;

if (!configReader2.readFromFile("VFilterParams.json"))

std::cout << "Can't read from file" << std::endl;

cr::video::VFilterParams params2;

if (!configReader2.get(params2, "Params"))

std::cout << "Can't get params" << std::endl;

VFilterParams.json will look like:

```json
{
    "Params": {
        "custom1": 22.3,
        "custom2": 23.4,
        "custom3": 24.5,
        "level": 10.1,
        "mode": 1,
        "type": 2
    }
}
```

Build and connect to your project

Typical commands to build MotionMagnificator library:

```bash
cd MotionMagnificator
mkdir build
cd build
cmake ..
make
```

If you want to connect MotionMagnificator to your CMake project as source code, you can do the following. For example, suppose your repository has this structure:

```
CMakeLists.txt
src
    CMakeLists.txt
    yourLib.h
    yourLib.cpp
```

Create a 3rdparty folder in your repository and copy the MotionMagnificator repository folder there. The new structure of your repository:

```
CMakeLists.txt
src
    CMakeLists.txt
    yourLib.h
    yourLib.cpp
3rdparty
    MotionMagnificator
```

Create a CMakeLists.txt file in the 3rdparty folder. It should contain:

```cmake
cmake_minimum_required(VERSION 3.13)

################################################################################
## 3RD-PARTY
## dependencies for the project
################################################################################
project(3rdparty LANGUAGES CXX)

################################################################################
## SETTINGS
## basic 3rd-party settings before use
################################################################################
# To inherit the top-level architecture when the project is used as a submodule.
SET(PARENT ${PARENT}_YOUR_PROJECT_3RDPARTY)
# Disable self-overwriting of parameters inside included subdirectories.
SET(${PARENT}_SUBMODULE_CACHE_OVERWRITE OFF CACHE BOOL "" FORCE)

################################################################################
## CONFIGURATION
## 3rd-party submodules configuration
################################################################################
SET(${PARENT}_SUBMODULE_MOTION_MAGNIFICATOR ON CACHE BOOL "" FORCE)
if (${PARENT}_SUBMODULE_MOTION_MAGNIFICATOR)
    SET(${PARENT}_MOTION_MAGNIFICATOR ON CACHE BOOL "" FORCE)
    SET(${PARENT}_MOTION_MAGNIFICATOR_BENCHMARK OFF CACHE BOOL "" FORCE)
    SET(${PARENT}_MOTION_MAGNIFICATOR_DEMO OFF CACHE BOOL "" FORCE)
    SET(${PARENT}_MOTION_MAGNIFICATOR_EXAMPLE OFF CACHE BOOL "" FORCE)
endif()

################################################################################
## INCLUDING SUBDIRECTORIES
## Adding subdirectories according to the 3rd-party configuration
################################################################################
if (${PARENT}_SUBMODULE_MOTION_MAGNIFICATOR)
    add_subdirectory(MotionMagnificator)
endif()
```

The 3rdparty/CMakeLists.txt file adds the MotionMagnificator folder to your project and excludes the benchmark, demo application and example from compiling (by default they are excluded from compiling when MotionMagnificator is included as a sub-repository). The new structure of your repository will be:

```
CMakeLists.txt
src
    CMakeLists.txt
    yourLib.h
    yourLib.cpp
3rdparty
    CMakeLists.txt
    MotionMagnificator
```

Next, include the 3rdparty folder in the main CMakeLists.txt file of your repository by adding the following line at the end of it:

```cmake
add_subdirectory(3rdparty)
```

Then link the MotionMagnificator library in your src/CMakeLists.txt file:

```cmake
target_link_libraries(${PROJECT_NAME} MotionMagnificator)
```

Done!

Example

The following simple application shows how to use the MotionMagnificator library. It opens the video file “test.mp4”, magnifies motion in each frame and displays the result; press ESC to exit.

```cpp
#include <opencv2/opencv.hpp>
#include "MotionMagnificator.h"

int main(void)
{
    // Open video file "test.mp4".
    cv::VideoCapture videoSource;
    if (!videoSource.open("test.mp4"))
        return -1;

    // Create frames.
    cv::Mat bgrImg;
    int width = (int)videoSource.get(cv::CAP_PROP_FRAME_WIDTH);
    int height = (int)videoSource.get(cv::CAP_PROP_FRAME_HEIGHT);
    cr::video::Frame frameYuv(width, height, cr::video::Fourcc::YUV24);

    // Create motion magnificator and set initial params.
    cr::video::MotionMagnificator magnificator;
    magnificator.setParam(cr::video::VFilterParam::TYPE, 0); // CPU
    magnificator.setParam(cr::video::VFilterParam::MODE, 1);
    magnificator.setParam(cr::video::VFilterParam::LEVEL, 50);

    // Main loop.
    while (true)
    {
        // Capture next video frame. Default BGR pixel format.
        videoSource >> bgrImg;
        if (bgrImg.empty())
        {
            // Set initial video position to replay.
            videoSource.set(cv::CAP_PROP_POS_FRAMES, 0);
            continue;
        }

        // Convert BGR to YUV for motion magnificator. yuvImg wraps the
        // frameYuv buffer, so cvtColor writes directly into the frame.
        cv::Mat yuvImg(height, width, CV_8UC3, frameYuv.data);
        cv::cvtColor(bgrImg, yuvImg, cv::COLOR_BGR2YUV);

        // Magnify movement with the params set above.
        magnificator.processFrame(frameYuv);

        // Convert result YUV to BGR to display.
        cv::cvtColor(yuvImg, bgrImg, cv::COLOR_YUV2BGR);

        // Show video.
        cv::imshow("VIDEO", bgrImg);
        if (cv::waitKey(1) == 27)
            return 0;
    }
    return 1;
}
```

Benchmark

The benchmark allows you to evaluate the speed of the algorithm on different platforms. It is a console application that asks for the initial parameters after startup. After the parameters are entered, the application creates 100 synthetic images and processes them. After processing the 100 images, the application calculates and shows statistics (minimum / average / maximum processing time per frame). Benchmark console interface:

Console output for CPU, one thread option:

```
=================================================
MotionMagnificator v3.2.0 benchmark
Average processing time per frame
=================================================
Set frame width: 1920
Set frame height: 1080
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | : 0
Time 6.576 -> 7.337 -> 9.47 msec
Time 6.556 -> 7.391 -> 12.945 msec
Time 6.669 -> 7.586 -> 12.512 msec
Time 6.716 -> 7.72 -> 13.716 msec
```

Console output for CPU, multi threads with OpenMP option:

```
=================================================
MotionMagnificator v3.2.0 benchmark
Average processing time per frame
=================================================
Set frame width: 1920
Set frame height: 1080
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | : 1
Set number of threads: 4
Time 2.926 -> 3.75 -> 6.194 msec
Time 2.962 -> 3.667 -> 6.472 msec
Time 2.983 -> 3.538 -> 6.422 msec
Time 2.993 -> 3.553 -> 11.449 msec
```

Console output for GPU with OpenCL option:

```
=================================================
MotionMagnificator v3.2.0 benchmark
Average processing time per frame
=================================================
Set frame width: 1920
Set frame height: 1080
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | : 2
OpenCL was found. Available GPU devices for OpenCL implementation:
Platform 0:
Device nr 0: NVIDIA CUDA / NVIDIA GeForce RTX 4050 Laptop GPU
Platform 1:
Device nr 0: Intel(R) OpenCL Graphics / Intel(R) Iris(R) Xe Graphics
Platform 2:
Device nr 0: OpenCLOn12 / Intel(R) Iris(R) Xe Graphics
Device nr 1: OpenCLOn12 / NVIDIA GeForce RTX 4050 Laptop GPU
Device nr 2: OpenCLOn12 / Microsoft Basic Render Driver
Enter platform number: 1
Enter device number: 0
Enter number of GPU threads (0 - for auto detection): 0
Time 7.236 -> 7.838 -> 10.778 msec
Time 7.24 -> 7.809 -> 11.027 msec
Time 7.248 -> 7.851 -> 10.902 msec
Time 7.217 -> 7.82 -> 10.514 msec
```

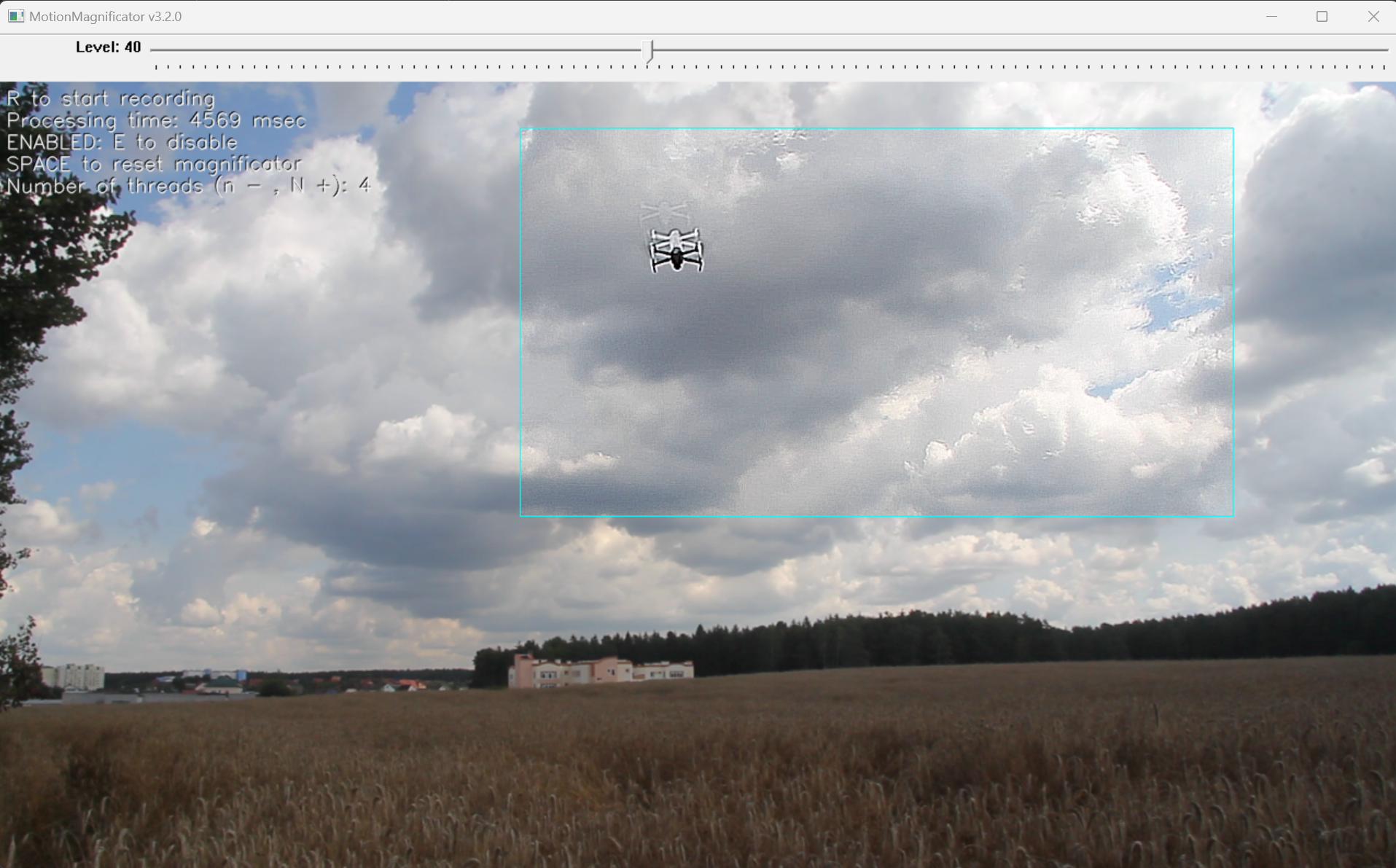

Demo application

The demo application is intended to evaluate the performance of the MotionMagnificator C++ library. It allows you to evaluate the motion magnification algorithm on a chosen video file. It is a console application and can be used as an example of MotionMagnificator library usage. The application uses the OpenCV library (version 4.5 or higher) for capturing, recording and displaying video, and for forming a simple user interface. The demo application does not require installation. It is compiled for Windows OS x64 (Windows 10 and newer) as well as for Linux OS (a few distros; to get the demo application for a specific Linux distro, send us a request). Table 6 shows the list of files of the demo application.

Table 6 - List of files of demo application (example for Windows OS).

| File | Description |
|---|---|
| MotionMagnificatorDemo.exe | Demo application executable file for Windows OS. |
| opencv_world480.dll | OpenCV library file version 4.8.0 for Windows x64. |
| opencv_videoio_msmf480_64.dll | OpenCV library file version 4.8.0 for Windows x64. |
| opencv_videoio_ffmpeg480_64.dll | OpenCV library file version 4.8.0 for Windows x64. |
| VC_redist.x64 | Installer of necessary system libraries for Windows x64. |
| src | Folder with application source code. |
| test.mp4 | Test video file. |

To launch the demo application, run the MotionMagnificatorDemo.exe executable file on Windows x64 OS, or run the following commands on Linux:

```bash
sudo chmod +x MotionMagnificatorDemo
./MotionMagnificatorDemo
```

If a message about missing system libraries appears when launching the application (on Windows OS), install the VC_redist.x64.exe program, which installs the system libraries required for operation. After startup, the user must choose the platform:

```
=================================================
MotionMagnificator v3.2.0 demo application
=================================================
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | :
```

Depending on the selected platform, the user will be asked for additional parameters. Example for CPU, multi threads with OpenMP:

```
=================================================
MotionMagnificator v3.2.0 demo application
=================================================
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | : 1
Set number of threads: 4
Open file dialog? (y/n)
```

Example for GPU with OpenCL (system with a single Intel GPU):

```
MotionMagnificator v3.2.0 demo application
OpenCL was found. Available GPU devices for OpenCL implementation:
Platform 0:
Device nr 0: Intel(R) OpenCL Graphics / Intel(R) UHD Graphics
Enter platform number: 0
Enter device number: 0
Enter global work size: 720
Open file dialog? (y/n) y
```

Example for OpenCL:

```
=================================================
MotionMagnificator v3.2.0 demo application
=================================================
Select platform | 0 - CPU, one thread | 1 - CPU, multi threads with OpenMP | 2 - GPU with OpenCL | : 2
OpenCL was found. Available GPU devices for OpenCL implementation:
Platform 0:
Device nr 0: NVIDIA CUDA / NVIDIA GeForce RTX 4050 Laptop GPU
Platform 1:
Device nr 0: Intel(R) OpenCL Graphics / Intel(R) Iris(R) Xe Graphics
Platform 2:
Device nr 0: OpenCLOn12 / Intel(R) Iris(R) Xe Graphics
Device nr 1: OpenCLOn12 / NVIDIA GeForce RTX 4050 Laptop GPU
Device nr 2: OpenCLOn12 / Microsoft Basic Render Driver
Enter platform number: 1
Enter device number: 0
Enter number of GPU threads (0 - for auto detection): 0
Open file dialog? (y/n)
```

Next, the user chooses between the file dialog and an initialization string (for OpenCV). If the user does not choose the file dialog option, the application asks for a video source initialization string: the user can manually enter a file name, a camera number (0, 1, 2, etc.) or an RTSP initialization string (example: rtsp://192.168.0.1:7031/live). After that, the user will see the user interface as shown below (if the video source is open and the region of interest is set).

To control the application, the main video display window must be active (in focus), and the English keyboard layout must be active with CapsLock off. The program is controlled with the keyboard and the mouse (magnification ROI control).

Table 7 - Control buttons.

| Button | Description |
|---|---|
| ESC | Exit the application. If video recording is active, it will be stopped. |
| SPACE | Reset the motion magnificator. |
| R | Start/stop video recording. When video recording is enabled, a file dst_[date and time].avi (result video) is created in the directory with the application executable file. What is displayed to the user is recorded. To stop the recording, press the R key again. During video recording, the application shows a warning message. |
| E | Enable/disable amplification. |
| N / n | Supported only when Type is set to 1 (OpenMP): capital ‘N’ increases the number of threads used for computing on the CPU and small ‘n’ decreases it. |

The magnification level can be changed with the slider. The user can also set a magnification mask (a rectangular area in which the image will be magnified). To set this area, hold the left mouse button and draw a line from the top-left corner of the required magnification area to its bottom-right corner. The magnification area is marked in blue, as shown in the image.