VPipeline C++ library

v4.0.0

Table of contents

- Overview

- Versions

- Library files

- Video tracker centering mode

- Pan and tilt unit control notes

- VPipeline class description

- Enums

- VPipelineParams class description

- Build and connect to your project

- Example

Overview

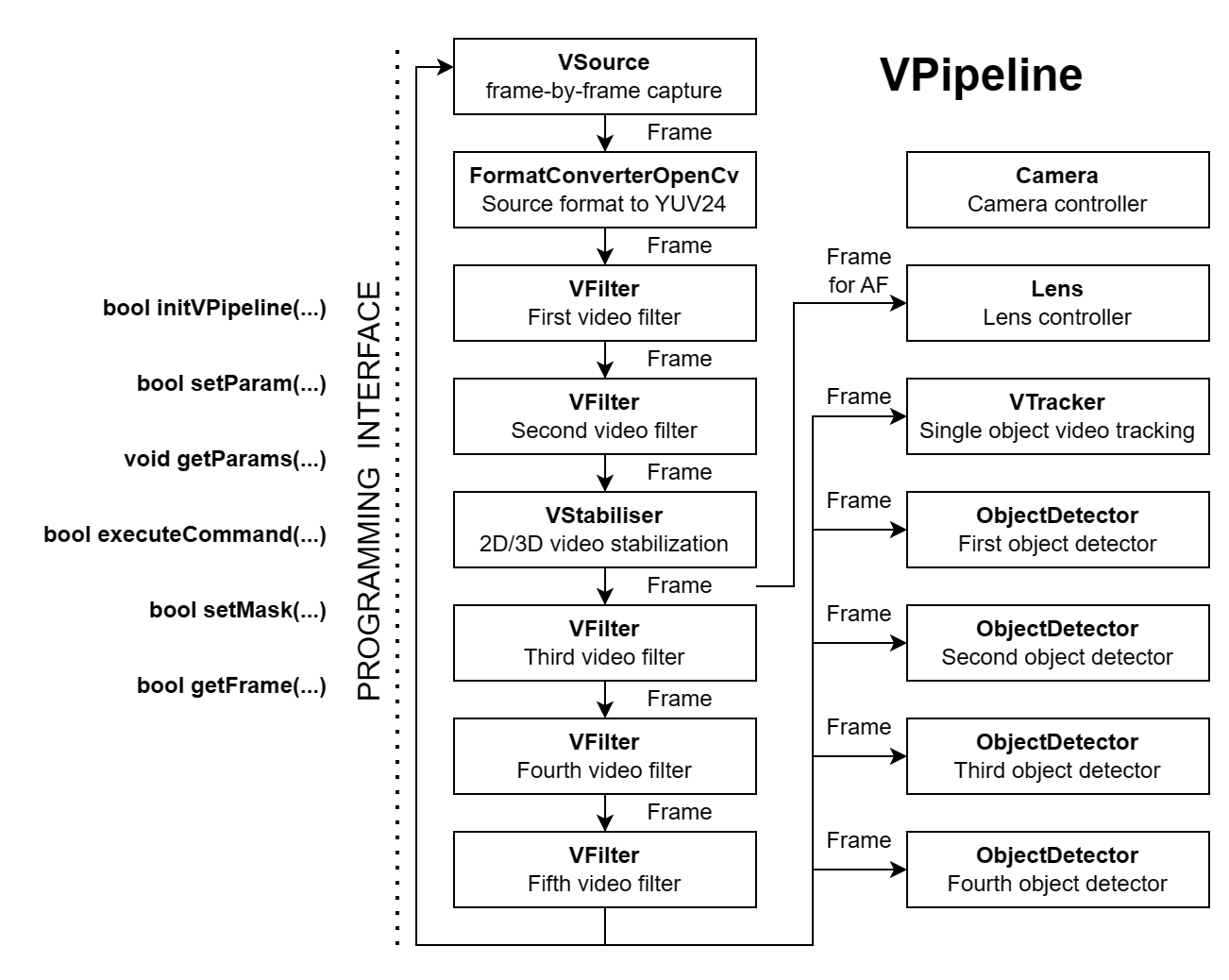

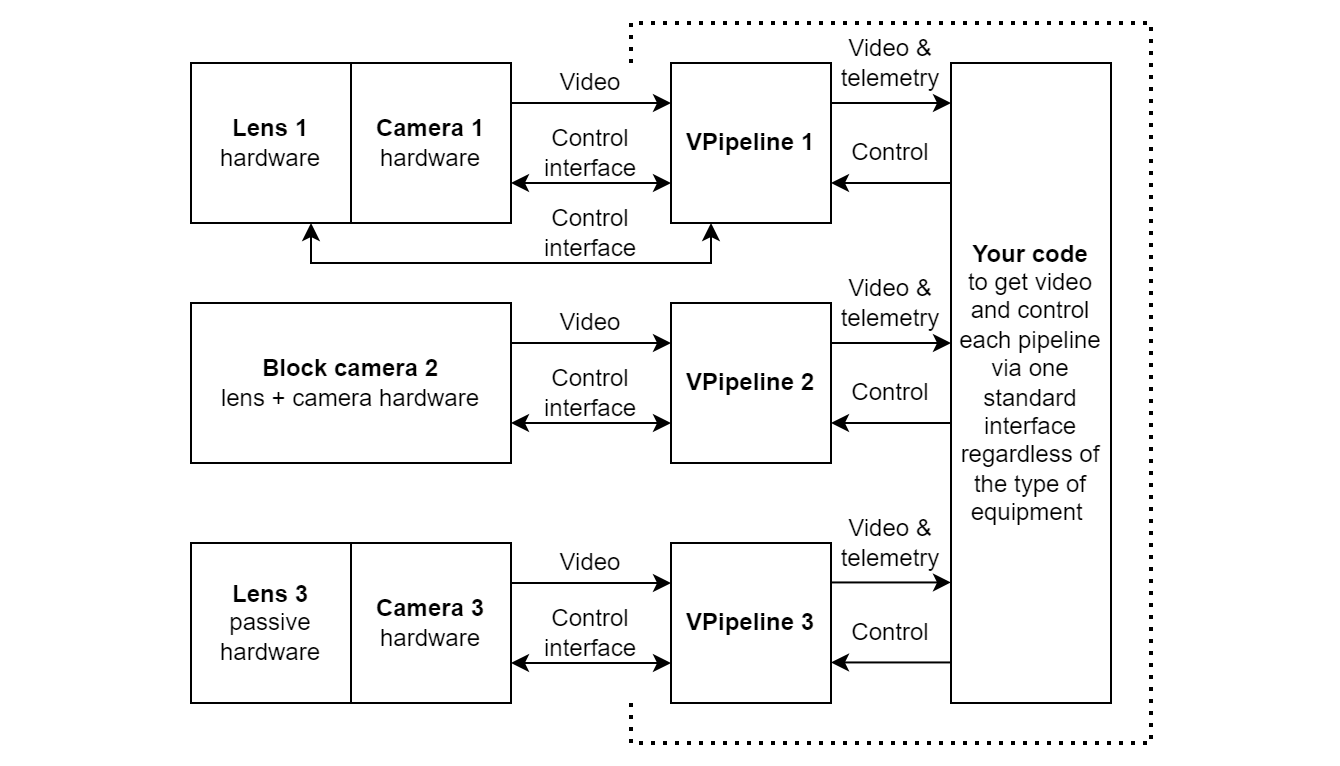

The VPipeline C++ library is a fully functional video processing pipeline with interfaces for connecting different video filters, video trackers, object detectors and video stabilization modules. The library aims to provide a simple interface to a complex video processing pipeline, as well as to camera and lens control, regardless of hardware. It has two main goals: to provide the video processing pipeline itself and to provide a remote control interface for the pipeline (encoding / decoding of commands and telemetry). It can be used on an edge device for video processing as well as on the client side to control the video processing pipeline. Users can plug in their own implementations of particular algorithms through interfaces (VFilter, VTracker, VStabiliser, VSource and ObjectDetector), so the library offers flexibility in the choice of algorithms while keeping standard interfaces to control each of them. The library depends on:

- Camera library (provides interface to control / obtain camera / image sensor parameters, source code included, Apache 2.0 license)
- Lens library (provides interface to control / obtain lens parameters, source code included, Apache 2.0 license)
- ObjectDetector library (provides interface for object detectors, source code included, Apache 2.0 license)
- VSource library (provides interface for video capture, source code included, Apache 2.0 license)
- VTracker library (provides interface for video tracking, source code included, Apache 2.0 license)
- VStabiliser library (provides interface for video stabilization, source code included, Apache 2.0 license)
- VFilter library (provides interface for video filtering, source code included, Apache 2.0 license)
- Logger (provides functions for logging, source code included, Apache 2.0 license)
- FormatConverter (provides functions to convert pixel formats, source code included)

The VPipeline library doesn’t include implementations of particular video processing algorithms, only the interfaces for them. The library uses the C++17 standard and has no dependencies that must be installed separately in the OS. The library is compatible with Linux and Windows. Video processing pipeline structure:

Processing inside VPipeline is distributed between multiple synchronized threads. Each processing thread is responsible for a particular algorithm (video filter, stabilization, video tracking or detection). The user can initialize a camera controller module, a lens controller module, a video stabilizer module, a video tracker module, up to 7 video filter modules and up to 7 object detector modules. The library implements a video processing pipeline (conveyor) with the following steps:

- waiting for the next video frame from the video source
- converting the source pixel format to YUV24
- filtering the frame by video filter 0, then video filter 1, then video filter 2
- video stabilization
- filtering the frame by video filter 3, then video filter 4, then video filter 5
- video tracking, with frame centering in tracking mode if required
- filtering the frame by video filter 6
- object detection by up to 7 detectors

The user can get the video frame from the pipeline in YUV24 format after video filter 6. Each step waits for the result of the previous step and notifies the next step after processing. Each step runs in a separate thread. The video processing FPS is determined by the FPS of the video source, or by the slowest module (except for object detectors) if processing in that module takes longer than the period between frames.
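The "wait for the previous step, process, notify the next step" principle can be illustrated with a minimal sketch. This is not the VPipeline implementation: `Channel`, `Frame`, `Step` and `runConveyor` are hypothetical names introduced only for this illustration, frames are replaced by plain integers, and the hand-off queue is unbounded, which a real-time pipeline would probably not want.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical hand-off channel between two neighbouring steps.
template <typename T>
class Channel
{
public:
    // Called by the previous step: store the result and notify the next step.
    void push(std::optional<T> item)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_queue.push(std::move(item));
        }
        m_cond.notify_one();
    }

    // Called by the next step: block until the previous step delivers a result.
    // An empty optional means "no more frames".
    std::optional<T> pop()
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_cond.wait(lock, [this] { return !m_queue.empty(); });
        std::optional<T> item = std::move(m_queue.front());
        m_queue.pop();
        return item;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cond;
    std::queue<std::optional<T>> m_queue;
};

using Frame = int;                        // Stand-in for a video frame.
using Step = std::function<Frame(Frame)>; // Stand-in for a processing module.

// Runs every step in its own thread and returns the frames after the last step.
std::vector<Frame> runConveyor(const std::vector<Frame>& source,
                               const std::vector<Step>& steps)
{
    std::vector<Channel<Frame>> channels(steps.size() + 1);
    std::vector<std::thread> threads;

    for (std::size_t i = 0; i < steps.size(); ++i)
    {
        threads.emplace_back([&channels, &steps, i] {
            // Wait for the previous step, process, notify the next step.
            while (std::optional<Frame> frame = channels[i].pop())
                channels[i + 1].push(steps[i](*frame));
            channels[i + 1].push(std::nullopt); // Forward end-of-stream.
        });
    }

    // Feed frames from the "video source", then signal end-of-stream.
    for (Frame frame : source)
        channels[0].push(frame);
    channels[0].push(std::nullopt);

    // Collect the output of the last step.
    std::vector<Frame> result;
    while (std::optional<Frame> frame = channels[steps.size()].pop())
        result.push_back(*frame);

    for (std::thread& thread : threads)
        thread.join();
    return result;
}
```

Because every step has exactly one thread and the channels are FIFO, frames leave the conveyor in the order they entered it, and the overall throughput is bounded by the slowest step.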

The VPipeline library provides a simple interface to control the pipeline, including camera and lens control. Usage principles: the user initializes the pipeline by calling the initVPipeline(…) method, which accepts a large parameter structure covering all functional modules of the pipeline. The user should also pass pointers to the module objects: video stabilizer, video tracker, object detectors (up to 7), video source, camera controller, lens controller and video filters (up to 7). If a particular pointer is not provided, the pipeline initializes a default “dummy” module which does nothing. After initialization the pipeline runs its internal processing threads. The user can control the pipeline by changing parameters (setParam(…) method), executing commands (executeCommand(…) method) and setting detection and filtering masks for object detectors and video filters (setMask(…) method). All these methods are non-blocking and thread-safe. To get all current pipeline parameters, including tracking and detection results, use the getParams(…) method. To get a new video frame from the video source after preprocessing (after video filter 6), use the getFrame(…) method. Thus the user has a standard interface to the camera, lens, video source, video tracker and other modules regardless of hardware type. The VPipeline class provides the same set of commands and functions for any combination of lens and camera. If the lens and camera interfaces are not used, the pipeline can work without them, and without any other functional module if needed. The VPipeline library simplifies the structure of embedded video processing software. Usage example:

Versions

Table 1 - Library versions.

| Version | Release date | What’s new |
|---|---|---|
| 1.0.0 | 18.05.2022 | First version. |
| 2.0.0 | 18.02.2024 | - Interface changed. - Added new video processing modules. - Video streaming functions excluded. - Documentation updated. |
| 3.0.0 | 14.03.2024 | - Full functional implementation. - Added VFilter interface. - Added test application. - Documentation updated. |
| 3.0.1 | 26.03.2024 | - Camera class updated. - FormatConverterOpenCv class updated. - Lens class updated. - Logger class updated. - ObjectDetector class updated. - VFilter class updated. - VSource class updated. - VStabiliser class updated. - VTracker class updated. - Documentation updated. |
| 3.0.2 | 21.05.2024 | - Submodules updated. - Documentation updated. |
| 3.0.3 | 08.08.2024 | - CMake structure updated. - Minor casting issues fixed. |
| 3.0.4 | 29.09.2024 | - VStabiliser interface updated. |
| 3.0.5 | 03.04.2025 | - Logger submodule updated. |
| 3.0.6 | 18.04.2025 | - VStabiliser submodule updated. |
| 4.0.0 | 04.01.2026 | - OpenCV dependencies excluded. - Added additional slots for filters and object detectors. - Added video tracking centering mode. |

Library files

The VPipeline C++ library is provided as source code. Users are given a set of files in the form of a CMake project (repository). The repository structure is outlined below:

CMakeLists.txt --------------------------- Main CMake file of the library.

3rdparty --------------------------------- Folder with third-party libraries.

CMakeLists.txt ----------------------- CMake file to include third-party libraries.

Camera ------------------------------- Folder with the Camera library source code.

Lens --------------------------------- Folder with the Lens library source code.

ObjectDetector ----------------------- Folder with the ObjectDetector library source code.

VSource ------------------------------ Folder with the VSource library source code.

VStabiliser -------------------------- Folder with the VStabiliser library source code.

VTracker ----------------------------- Folder with the VTracker library source code.

VFilter ------------------------------ Folder with VFilter library source code.

FormatConverter ---------------------- Folder with FormatConverter library source code.

DummyCamera -------------------------- Folder with "dummy" camera implementation.

CMakeLists.txt ------------------- CMake file for "dummy" camera.

DummyCamera.cpp ------------------ Source code file of the library.

DummyCamera.h -------------------- Header file of the library.

DummyCameraVersion.h ------------- Header file with library version.

DummyCameraVersion.h.in ---------- CMake service file to generate version header.

DummyLens ---------------------------- Folder with "dummy" lens implementation.

CMakeLists.txt ------------------- CMake file for "dummy" lens.

DummyLens.cpp -------------------- Source code file of the library.

DummyLens.h ---------------------- Header file of the library.

DummyLensVersion.h --------------- Header file with library version.

DummyLensVersion.h.in ------------ CMake service file to generate version header.

DummyObjectDetector ------------------ Folder with "dummy" object detector implementation.

CMakeLists.txt ------------------- CMake file for "dummy" object detector.

DummyObjectDetector.cpp ---------- Source code file of the library.

DummyObjectDetector.h ------------ Header file of the library.

DummyObjectDetectorVersion.h ----- Header file with library version.

DummyObjectDetectorVersion.h.in -- CMake service file to generate version header.

DummyVFilter ------------------------- Folder with "dummy" video filter implementation.

CMakeLists.txt ------------------- CMake file for "dummy" video filter.

DummyVFilter.cpp ----------------- Source code file of the library.

DummyVFilter.h ------------------- Header file of the library.

DummyVFilterVersion.h ------------ Header file with library version.

DummyVFilterVersion.h.in --------- CMake service file to generate version header.

DummyVSource ------------------------- Folder with "dummy" video source implementation.

CMakeLists.txt ------------------- CMake file for "dummy" video source.

DummyVSource.cpp ----------------- Source code file of the library.

DummyVSource.h ------------------- Header file of the library.

DummyVSourceVersion.h ------------ Header file with library version.

DummyVSourceVersion.h.in --------- CMake service file to generate version header.

DummyVStabiliser --------------------- Folder with "dummy" video stabilizer implementation.

CMakeLists.txt ------------------- CMake file for "dummy" video stabilizer.

DummyVStabiliser.cpp ------------- Source code file of the library.

DummyVStabiliser.h --------------- Header file of the library.

DummyVStabiliserVersion.h -------- Header file with library version.

DummyVStabiliserVersion.h.in ----- CMake service file to generate version header.

DummyVTracker ------------------------ Folder with "dummy" video tracker implementation.

CMakeLists.txt ------------------- CMake file for "dummy" video tracker library.

DummyVTracker.cpp ---------------- Source code file of the library.

DummyVTracker.h ------------------ Header file of the library.

DummyVTrackerVersion.h ----------- Header file with library version.

DummyVTrackerVersion.h.in -------- CMake service file to generate version header.

src -------------------------------------- Folder with source code of the library.

CMakeLists.txt ----------------------- CMake file of the library.

VPipeline.cpp ------------------------ C++ implementation file.

VPipeline.h -------------------------- Header file with VPipeline class declaration.

VPipelineVersion.h ------------------- Header file which includes version of the library.

VPipelineVersion.h.in ---------------- CMake service file to generate version header.

tests ------------------------------------ Folder with test applications.

GuiTest ------------------------------ Folder with test application with GUI.

CMakeLists.txt ------------------- CMake file of the test application.

main.cpp ------------------------- Source code file of test application.

ProtocolTest ------------------------- Folder with protocol test application.

CMakeLists.txt ------------------- CMake file of the test application.

main.cpp ------------------------- Source code file of test application.

Video tracker centering mode

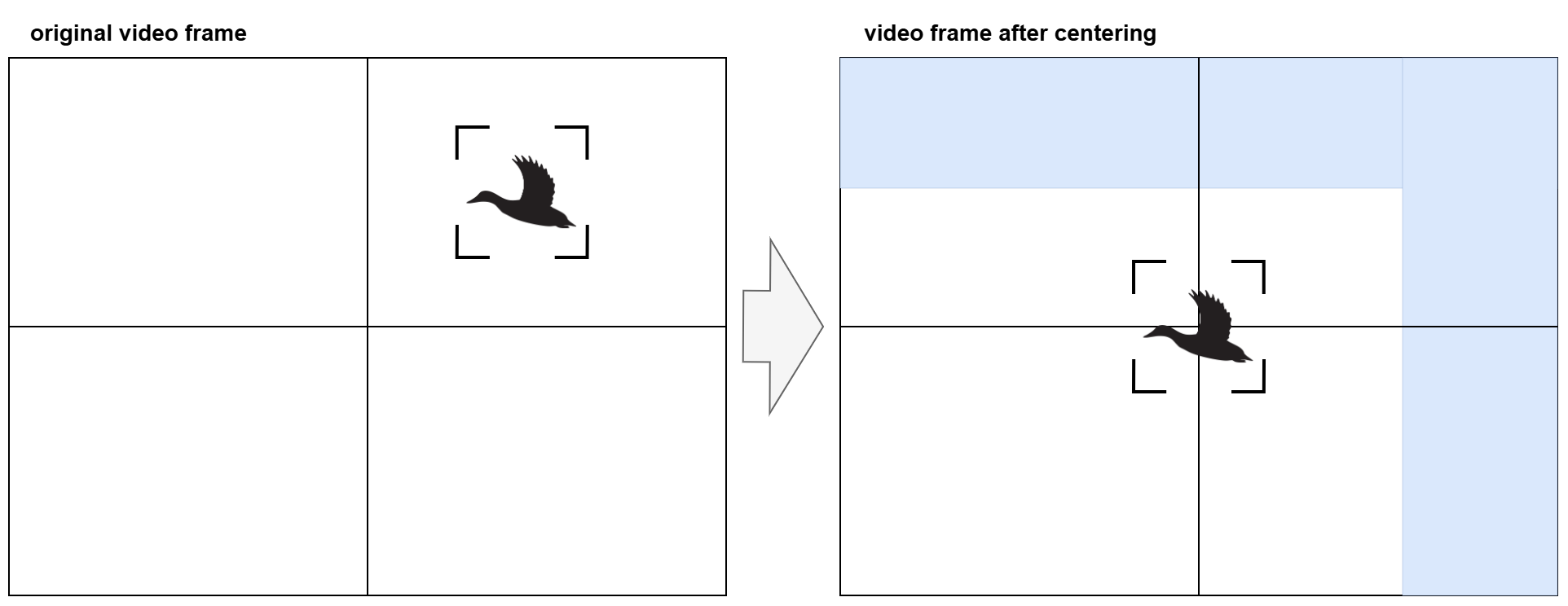

Video tracker centering mode can be applied to the image when the video tracker is in TRACKING mode (tracking an object). It shifts the image to keep the tracked object in the center of the screen. In some cases it is more comfortable for the user to see the tracked object exactly in the center of the screen. Centering mode also provides a very stable image despite camera movement and vibration, which is necessary for some video filters (for example, gas leak detection) and for some object detectors (for example, a motion detector). Below is an illustration of video tracker centering mode:

The image above shows the original video frame with the tracking rectangle and the image after video tracker centering (image offset). After centering, the tracking rectangle is in the center of the screen. The blue part of the video frame illustrates that, because the image is shifted, the result contains only part of the original image. The VPipeline library has parameters to enable / disable centering and to set the maximum horizontal and vertical image offset (see GeneralParam enum). If the maximum image offset is greater than 0 and the tracking rectangle offset exceeds the limit, the library shifts the image only up to the limit. This prevents unexpectedly large image shifts. The maximum offset cannot be more than half of the image size.
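The offset limiting described above can be sketched for one axis as follows. This is an illustration under stated assumptions, not the library's code: `centeringShift` is a hypothetical helper, the sign convention (positive shift moves the rectangle towards larger coordinates) is chosen arbitrarily, and a limit of 0 or less is assumed to mean "no explicit limit", so only the half-image bound applies.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: image shift along one axis, in pixels.
// rectCenter - tracking rectangle center along the axis.
// frameSize  - frame width (for X) or height (for Y).
// maxOffset  - configured maximum offset; <= 0 is ASSUMED to mean "no explicit limit".
int centeringShift(int rectCenter, int frameSize, int maxOffset)
{
    assert(frameSize >= 0);
    const int halfFrame = frameSize / 2;
    // The offset can never be more than half of the image.
    const int limit = (maxOffset > 0) ? std::min(maxOffset, halfFrame) : halfFrame;
    // Shift needed to bring the rectangle center to the frame center.
    const int wanted = halfFrame - rectCenter;
    // Move the image according to the limits only.
    return std::clamp(wanted, -limit, limit);
}
```

For a 1280 pixel wide frame with a limit of 100 pixels, a rectangle centered at x = 900 would need a shift of -260 pixels, but the sketch returns -100, so the object moves towards the center without a large jump of the image.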

Pan and tilt unit control notes

In pan-tilt cameras and gimbals, tracking an object (moving the camera to keep the object in the center of the screen) requires calculating the position of the object relative to the center of the screen (we assume that the center of the screen coincides with the optical center of the system). The VPipeline library performs video stabilization first and then video tracking on the stabilized image. So the user gets a stabilized image from the pipeline, and all coordinates relate to the stabilized image. To calculate the control signal for the pan-tilt unit, the user needs coordinates on the non-stabilized image (relative to the optical center). Using the coordinates as is can make the system unstable, especially when the object is located near the center of the image. To recalculate the position of the tracking rectangle to the non-stabilized image, the user should use the transformation parameters from the video stabilizer and the image offsets from video tracker centering (if enabled). The code to recalculate the tracking rectangle coordinates to the non-stabilized image:

// Get params from pipeline.

VPipelineParams params;

pipeline.getParams(params);

// Get tracking rectangle position.

float xResult = (float)params.videoTracker.rectX;

float yResult = (float)params.videoTracker.rectY;

// Get video stabilizer transformation params.

float dx = (float)params.videoStabilizer.instantXOffset;

float dy = (float)params.videoStabilizer.instantYOffset;

float da = params.videoStabilizer.instantAOffset;

// Calculate source position.

int xSource = (int)((xResult - dx) * cos(-da) - (yResult - dy) * sin(-da));

int ySource = (int)((xResult - dx) * sin(-da) + (yResult - dy) * cos(-da));

// Compensate video tracker centering offsets.

xSource -= params.general.trackerCenteringOffsetX;

ySource -= params.general.trackerCenteringOffsetY;
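The same calculation can be wrapped in a standalone function, which makes it easier to test without a running pipeline. The sketch below is only an illustration: `Point` and `toSourcePoint` are hypothetical names, the rotation `da` is assumed to be in radians (as expected by `std::cos` / `std::sin`), and the result is kept in floating point, whereas the code above casts to int before subtracting the centering offsets.

```cpp
#include <cmath>

// Hypothetical result type for this sketch.
struct Point
{
    float x;
    float y;
};

// x, y                   - position on the stabilized (and centered) image.
// dx, dy                 - video stabilizer offsets, pixels.
// da                     - video stabilizer rotation, ASSUMED to be in radians.
// centeringX, centeringY - video tracker centering offsets, pixels.
Point toSourcePoint(float x, float y,
                    float dx, float dy, float da,
                    float centeringX, float centeringY)
{
    const float c = std::cos(-da);
    const float s = std::sin(-da);
    // Remove the stabilizer translation.
    const float tx = x - dx;
    const float ty = y - dy;
    // Rotate by -da, then compensate the centering offsets.
    return { tx * c - ty * s - centeringX,
             tx * s + ty * c - centeringY };
}
```

With zero rotation the function reduces to subtracting the stabilizer offsets and the centering offsets, which is a quick sanity check when wiring it to real parameters.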

VPipeline class description

VPipeline class declaration

VPipeline.h file contains VPipeline class declaration. Class declaration:

class VPipeline

{

public:

/// Class constructor.

VPipeline();

/// Class destructor.

~VPipeline();

/// Get string of current class version.

static std::string getVersion();

/// Init video processing pipeline.

bool initVPipeline(

VPipelineParams& params,

cr::video::VSource* videoSource = nullptr,

cr::lens::Lens* lens = nullptr,

cr::camera::Camera* camera = nullptr,

cr::vstab::VStabiliser* videoStabiliser = nullptr,

cr::vtracker::VTracker* videoTracker = nullptr,

cr::detector::ObjectDetector* objectDetector0 = nullptr,

cr::detector::ObjectDetector* objectDetector1 = nullptr,

cr::detector::ObjectDetector* objectDetector2 = nullptr,

cr::detector::ObjectDetector* objectDetector3 = nullptr,

cr::detector::ObjectDetector* objectDetector4 = nullptr,

cr::detector::ObjectDetector* objectDetector5 = nullptr,

cr::detector::ObjectDetector* objectDetector6 = nullptr,

cr::video::VFilter* videoFilter0 = nullptr,

cr::video::VFilter* videoFilter1 = nullptr,

cr::video::VFilter* videoFilter2 = nullptr,

cr::video::VFilter* videoFilter3 = nullptr,

cr::video::VFilter* videoFilter4 = nullptr,

cr::video::VFilter* videoFilter5 = nullptr,

cr::video::VFilter* videoFilter6 = nullptr);

/// Get video processing pipeline params.

void getParams(VPipelineParams& params);

/// Set module parameter.

bool setParam(VPipelineModule module, int id, float value);

/// Execute command.

bool executeCommand(VPipelineModule module,

int id,

float arg1 = -1.0f,

float arg2 = -1.0f,

float arg3 = -1.0f);

/// Get source video frame after processing.

bool getFrame(cr::video::Frame& frame, int timeoutMsec = 0);

/// Set detection mask to module.

bool setMask(VPipelineModule module, cr::video::Frame mask);

/// Encode set param command.

static void encodeSetParamCommand(uint8_t* data,

int& size,

VPipelineModule module,

int id,

float value);

/// Encode action command.

static void encodeCommand(uint8_t* data,

int& size,

VPipelineModule module,

int id,

float arg1 = 0.0f,

float arg2 = 0.0f,

float arg3 = 0.0f);

/// Decode command.

static int decodeCommand(uint8_t* data,

int size,

VPipelineModule& module,

int& id,

float& value1,

float& value2,

float& value3);

/// Decode and execute command.

bool decodeAndExecuteCommand(uint8_t* data, int size);

};

getVersion method

The getVersion() static method returns a string with the current version of the VPipeline class. Method declaration:

static std::string getVersion();

The method can be used without a VPipeline class instance. Example:

cout << "VPipeline class version: " << VPipeline::getVersion() << endl;

Console output:

VPipeline class version: 4.0.0

initVPipeline method

The initVPipeline(…) method initializes the video processing pipeline. It initializes all functional modules with the parameters given by the user and then starts the internal video processing threads. This method must be called before any other method (except the static methods that encode / decode commands and params). The user should pass pointers to the objects for particular modules (camera controller, lens controller, video source, video stabilizer, video tracker, object detectors and video filters). If the user doesn’t provide a pointer to a particular module, the pipeline initializes a “dummy” module which does nothing. Method declaration:

bool initVPipeline(

VPipelineParams& params,

cr::video::VSource* videoSource = nullptr,

cr::lens::Lens* lens = nullptr,

cr::camera::Camera* camera = nullptr,

cr::vstab::VStabiliser* videoStabiliser = nullptr,

cr::vtracker::VTracker* videoTracker = nullptr,

cr::detector::ObjectDetector* objectDetector0 = nullptr,

cr::detector::ObjectDetector* objectDetector1 = nullptr,

cr::detector::ObjectDetector* objectDetector2 = nullptr,

cr::detector::ObjectDetector* objectDetector3 = nullptr,

cr::detector::ObjectDetector* objectDetector4 = nullptr,

cr::detector::ObjectDetector* objectDetector5 = nullptr,

cr::detector::ObjectDetector* objectDetector6 = nullptr,

cr::video::VFilter* videoFilter0 = nullptr,

cr::video::VFilter* videoFilter1 = nullptr,

cr::video::VFilter* videoFilter2 = nullptr,

cr::video::VFilter* videoFilter3 = nullptr,

cr::video::VFilter* videoFilter4 = nullptr,

cr::video::VFilter* videoFilter5 = nullptr,

cr::video::VFilter* videoFilter6 = nullptr);

| Parameter | Description |
|---|---|
| params | VPipelineParams class object which contains all pipeline parameters, including parameters for the functional modules. |
| videoSource | Pointer to a VSource object. The pipeline uses this object to capture video from the video source. The user should provide a particular implementation of the VSource interface. If the user doesn’t provide a pointer to a video source, the pipeline creates a “dummy” video source module which generates artificial video frames with default parameters (resolution and FPS). If the user provides a pointer to a video source implementation and its initialization fails, the pipeline initializes the “dummy” video source instead. |
| lens | Pointer to a Lens object. The pipeline uses this object to control the lens. The user should provide a particular implementation of the Lens interface. If the user doesn’t provide a pointer to a lens controller, the pipeline creates a “dummy” lens controller. If a particular controller implements both the Lens and Camera interfaces (for block cameras which include an image sensor and a lens), the user should pass the same pointer separately for the lens and camera interfaces. |
| camera | Pointer to a Camera object. The pipeline uses this object to control the camera. The user should provide a particular implementation of the Camera interface. If the user doesn’t provide a pointer to a camera controller, the pipeline creates a “dummy” camera controller. If a particular controller implements both the Lens and Camera interfaces (for block cameras which include an image sensor and a lens), the user should pass the same pointer separately for the lens and camera interfaces. |
| videoStabiliser | Pointer to a VStabiliser object. The pipeline uses this object to stabilize video frames. The user should provide a particular implementation of the VStabiliser interface. If the user doesn’t provide a pointer to a video stabilizer, this function will not be available to the user. |
| videoTracker | Pointer to a VTracker object. The pipeline uses this object for video tracking of a single object. The user should provide a particular implementation of the VTracker interface. If the user doesn’t provide a pointer to a video tracker, the pipeline creates a “dummy” video tracker. |
| objectDetector0 | Pointer to the ObjectDetector 0 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector1 | Pointer to the ObjectDetector 1 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector2 | Pointer to the ObjectDetector 2 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector3 | Pointer to the ObjectDetector 3 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector4 | Pointer to the ObjectDetector 4 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector5 | Pointer to the ObjectDetector 5 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| objectDetector6 | Pointer to the ObjectDetector 6 object. The pipeline uses this object to detect objects in the video. An object detector can be a motion detector, a changes detector, a neural network, etc., but every detector must be compatible with the ObjectDetector interface to be usable by the pipeline. VPipeline includes seven slots for object detectors. The user can obtain detection results with the getParams(…) method. If the user doesn’t provide a pointer to an object detector, the pipeline creates a “dummy” object detector. |
| videoFilter0 | Pointer to the VFilter 0 object. The user can assign any video filter compatible with the VFilter interface. Usually the first video filter is an image flip function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter1 | Pointer to the VFilter 1 object. The user can assign any video filter compatible with the VFilter interface. Usually the second video filter is a digital zoom function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter2 | Pointer to the VFilter 2 object. The user can assign any video filter compatible with the VFilter interface. Usually the third video filter is a noise removal function (denoise). VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter3 | Pointer to the VFilter 3 object. The user can assign any video filter compatible with the VFilter interface. Usually the fourth video filter is a defog / dehaze function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter4 | Pointer to the VFilter 4 object. The user can assign any video filter compatible with the VFilter interface. Usually the fifth video filter is a motion magnification function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter5 | Pointer to the VFilter 5 object. The user can assign any video filter compatible with the VFilter interface. Usually the sixth video filter is a sharpening function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |
| videoFilter6 | Pointer to the VFilter 6 object. The user can assign any video filter compatible with the VFilter interface. Usually the seventh video filter is a custom processing function. VPipeline includes seven slots for video filters. The user can obtain video filter params with the getParams(…) method. If the user doesn’t provide a pointer to a video filter, the pipeline creates a “dummy” video filter. |

Returns: TRUE if the pipeline is initialized (or already initialized) or FALSE if not. The method returns FALSE only if video source initialization failed. The pipeline first tries to initialize the video source provided by the user (if provided) and, in case of any error, tries to initialize the “dummy” video source.
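The fallback order described above (user-provided video source first, “dummy” video source second, failure only if both fail) can be sketched as follows. Everything in this block is hypothetical and exists only for illustration: `Source`, `StubSource` and `selectSource` are not part of VPipeline or the VSource interface, and the real initialization takes parameters that are omitted here.

```cpp
#include <string>
#include <utility>

// Hypothetical stand-in for a video source interface.
class Source
{
public:
    virtual ~Source() = default;
    virtual bool open() = 0;
    virtual std::string name() const = 0;
};

// Hypothetical source whose open() result is fixed at construction,
// used here to play the role of a working or a failing module.
class StubSource : public Source
{
public:
    StubSource(std::string name, bool canOpen)
        : m_name(std::move(name)), m_canOpen(canOpen) {}
    bool open() override { return m_canOpen; }
    std::string name() const override { return m_name; }

private:
    std::string m_name;
    bool m_canOpen;
};

// Returns the source the pipeline would work with, or nullptr if
// neither the user-provided source nor the dummy source could be opened.
Source* selectSource(Source* userSource, Source& dummySource)
{
    // First try the source provided by the user (if provided).
    if (userSource != nullptr && userSource->open())
        return userSource;
    // In case of any error, fall back to the dummy source.
    if (dummySource.open())
        return &dummySource;
    // Only now is initialization considered failed.
    return nullptr;
}
```

In this sketch a null result corresponds to initVPipeline(…) returning FALSE, and any non-null result corresponds to TRUE.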

getParams method

The getParams(…) method returns all video processing pipeline parameters including detection and video tracking results. The method will obtain current params from each module (video source, video stabilizer, video tracker, object detector and video filters). Method declaration:

void getParams(VPipelineParams& params);

| Parameter | Description |
|---|---|
| params | VPipelineParams class object which contains all pipeline parameters, including parameters for the functional modules and detection and tracking results. |

setParam method

The setParam(…) method changes a parameter of a particular video processing pipeline module. The user must specify the functional module of the pipeline whose parameter is to be set. The method checks the module type and calls the setParam(…) method of the appropriate module (functional module object). Depending on the particular implementation of the functional module, a new parameter value can be accepted but not actually applied. To check the current parameter values, use the getParams(…) method. Method declaration:

bool setParam(VPipelineModule module, int id, float value);

| Parameter | Description |
|---|---|
| module | Functional module of the pipeline according to the VPipelineModule enum. Parameters can be changed for all functional modules. |
| id | Parameter ID. The parameter ID depends on the functional module (see the example below). The appropriate enum must be converted to an int value. Parameter enums for the functional modules: VSourceParam (video source parameters, described in the VSource interface class); LensParam (lens parameters, described in the Lens interface class); CameraParam (camera parameters, described in the Camera interface class); VTrackerParam (video tracker parameters, described in the VTracker interface class); VStabiliserParam (video stabilizer parameters, described in the VStabiliser interface class); VFilterParam (video filter parameters, described in the VFilter interface class); GeneralParam (general pipeline parameters, described in the VPipeline.h file); ObjectDetectorParam (object detector parameters, described in the ObjectDetector interface class). |
| value | Parameter value. Depends on the parameter ID and the functional module. For valid parameter values, see the description of the appropriate enum for the particular implementation. |

Returns: TRUE if parameter was accepted or FALSE if not.

Example of how to set parameters for different video processing pipeline modules:

// Set video source parameter.

pipeline->setParam(VPipelineModule::VIDEO_SOURCE, (int)VSourceParam::FOCUS_MODE, 0);

// Set lens parameter.

pipeline->setParam(VPipelineModule::LENS, (int)LensParam::FOCUS_POS, 1000);

// Set camera parameter.

pipeline->setParam(VPipelineModule::CAMERA, (int)CameraParam::BRIGHTNESS, 100);

// Set video tracker parameter.

pipeline->setParam(VPipelineModule::VIDEO_TRACKER, (int)VTrackerParam::NUM_CHANNELS, 3);

// Set video stabilizer parameter.

pipeline->setParam(VPipelineModule::VIDEO_STABILISER, (int)VStabiliserParam::TYPE, 1);

// Set video filter parameter.

pipeline->setParam(VPipelineModule::VIDEO_FILTER_1, (int)VFilterParam::LEVEL, 20);

// Set video filter parameter.

pipeline->setParam(VPipelineModule::VIDEO_FILTER_2, (int)VFilterParam::LEVEL, 10);

// Set video filter parameter.

pipeline->setParam(VPipelineModule::VIDEO_FILTER_3, (int)VFilterParam::TYPE, 1);

// Set video filter parameter.

pipeline->setParam(VPipelineModule::VIDEO_FILTER_4, (int)VFilterParam::LEVEL, 50);

// Set video filter parameter.

pipeline->setParam(VPipelineModule::VIDEO_FILTER_5, (int)VFilterParam::CUSTOM_1, 1.5);

// Set object detector parameter.

pipeline->setParam(VPipelineModule::OBJECT_DETECTOR_1, (int)ObjectDetectorParam::SCALE_FACTOR, 2);

// Set object detector parameter.

pipeline->setParam(VPipelineModule::OBJECT_DETECTOR_2, (int)ObjectDetectorParam::MIN_X_SPEED, 1.5);

// Set object detector parameter.

pipeline->setParam(VPipelineModule::OBJECT_DETECTOR_3, (int)ObjectDetectorParam::MIN_Y_SPEED, 2.5);

// Set object detector parameter.

pipeline->setParam(VPipelineModule::OBJECT_DETECTOR_4, (int)ObjectDetectorParam::MIN_Y_SPEED, 1.5);

// Set general pipeline parameter.

pipeline->setParam(VPipelineModule::GENERAL, (int)GeneralParam::LOG_MODE, 1);
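
The calls above ignore the return value. Since setParam(…) returns FALSE when a parameter is not accepted, it can be useful to apply a batch of settings and collect the ones that were rejected. The following is a minimal sketch, not part of the library: ParamSetting and applyParams are hypothetical names, and the helper is templated so that it relies only on a setParam(module, id, value) member returning bool.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

/// One parameter assignment. Hypothetical helper type, not part of VPipeline.
template <typename Module>
struct ParamSetting
{
    Module module; ///< Functional module (e.g. VPipelineModule::LENS).
    int id;        ///< Parameter ID already converted to int.
    float value;   ///< Parameter value.
};

/// Apply all settings in order and return the indexes of the rejected ones.
/// Hypothetical helper: works with any type that has setParam(module, id, value).
template <typename Pipeline, typename Module>
std::vector<std::size_t> applyParams(
    Pipeline& pipeline, const std::vector<ParamSetting<Module>>& settings)
{
    std::vector<std::size_t> rejected;
    for (std::size_t i = 0; i < settings.size(); ++i)
    {
        const ParamSetting<Module>& s = settings[i];
        // setParam(...) returns false if the value was not accepted.
        if (!pipeline.setParam(s.module, s.id, s.value))
            rejected.push_back(i);
    }
    return rejected;
}
```

With a real pipeline object the call would look like applyParams(*pipeline, settings), where settings holds entries such as {VPipelineModule::LENS, (int)LensParam::FOCUS_POS, 1000.0f}. An empty result means every parameter was accepted.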

executeCommand method

The executeCommand(…) method executes an action command for a particular video processing pipeline module. The user must specify the functional module of the pipeline that should execute the command. The method checks the module type and calls the executeCommand(…) method of the appropriate functional module object. Method declaration:

bool executeCommand(VPipelineModule module,

int id,

float arg1 = -1.0f,

float arg2 = -1.0f,

float arg3 = -1.0f);

| Parameter | Description |

|---|---|

| module | Functional module of pipeline according to VPipelineModule enum. Commands available for all functional modules. |

| id | Action command ID. The command ID depends on the functional module (see the example below). The appropriate enum must be converted to an int value. Commands are available for all modules: VSourceCommand - Video source action commands enum. Described in VSource interface class. LensCommand - Lens action commands enum. Described in Lens interface class. CameraCommand - Camera action commands enum. Described in Camera interface class. VTrackerCommand - Video tracker action commands enum. Described in VTracker interface class. VStabiliserCommand - Video stabilizer action commands enum. Described in VStabiliser interface class. ObjectDetectorCommand - Object detector commands enum. Described in ObjectDetector interface class. VFilterCommand - Video filter action commands enum. Described in VFilter interface class. GeneralCommand - General pipeline action commands enum. Described in VPipeline.h file. |

| arg1 | First command argument. Depends on command ID and functional module. To check valid arguments values user should check description of appropriate enum for particular implementation. |

| arg2 | Second command argument. Depends on command ID and functional module. To check valid arguments values user should check description of appropriate enum for particular implementation. |

| arg3 | Third command argument. Depends on command ID and functional module. To check valid arguments values user should check description of appropriate enum for particular implementation. |

Returns: TRUE if the command executed (accepted by pipeline) or FALSE if not.

Example how to execute command for different video processing pipeline modules:

// Execute video source command.

pipeline->executeCommand(VPipelineModule::VIDEO_SOURCE, (int)VSourceCommand::RESTART);

// Execute lens command.

pipeline->executeCommand(VPipelineModule::LENS, (int)LensCommand::ZOOM_TO_POS, 1000);

// Execute camera command.

pipeline->executeCommand(VPipelineModule::CAMERA, (int)CameraCommand::FREEZE);

// Execute video tracker command.

pipeline->executeCommand(VPipelineModule::VIDEO_TRACKER, (int)VTrackerCommand::CAPTURE, 100, 50, -1);

// Execute video stabilizer command.

pipeline->executeCommand(VPipelineModule::VIDEO_STABILISER, (int)VStabiliserCommand::RESET);

// Execute video filter command.

pipeline->executeCommand(VPipelineModule::VIDEO_FILTER_1, (int)VFilterCommand::ON);

// Execute video filter command.

pipeline->executeCommand(VPipelineModule::VIDEO_FILTER_2, (int)VFilterCommand::OFF);

// Execute video filter command.

pipeline->executeCommand(VPipelineModule::VIDEO_FILTER_3, (int)VFilterCommand::RESET);

// Execute video filter command.

pipeline->executeCommand(VPipelineModule::VIDEO_FILTER_4, (int)VFilterCommand::ON);

// Execute video filter command.

pipeline->executeCommand(VPipelineModule::VIDEO_FILTER_5, (int)VFilterCommand::ON);

// Execute object detector command.

pipeline->executeCommand(VPipelineModule::OBJECT_DETECTOR_1, (int)ObjectDetectorCommand::ON);

// Execute object detector command.

pipeline->executeCommand(VPipelineModule::OBJECT_DETECTOR_2, (int)ObjectDetectorCommand::OFF);

// Execute object detector command.

pipeline->executeCommand(VPipelineModule::OBJECT_DETECTOR_3, (int)ObjectDetectorCommand::RESET);

// Execute object detector command.

pipeline->executeCommand(VPipelineModule::OBJECT_DETECTOR_4, (int)ObjectDetectorCommand::RESET);

getFrame method

The getFrame(…) method returns the current video frame captured from the video source after it has been processed by the sixth video filter. The pipeline can be enabled or disabled. If the pipeline is disabled, the video source still works and the getFrame(…) method returns the frame straight from the video source module, without processing by any functional module. Method declaration:

bool getFrame(cr::video::Frame& frame, int timeoutMsec = 0);

| Parameter | Description |

|---|---|

| frame | Reference to Frame object. The method will return the video frame in YUV24 format (YUV 24 bits). |

| timeoutMsec | Timeout to wait for a new frame, milliseconds. Values: <0 - Wait endlessly for a new video frame to arrive. 0 - Just check if there is a new video frame to return. If not, the method will return FALSE immediately. >0 - Wait for a new video frame for the specified timeout. If there is no new frame within the specified timeout, the method will return FALSE. |

Returns: TRUE if a new frame is returned or FALSE if not.
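
The three timeout modes map naturally onto a condition variable wait. The sketch below is not the library's implementation. It is a minimal stand-in (a hypothetical FrameSlot class that holds an int instead of a Frame) which only illustrates the documented meaning of timeoutMsec. The rule that the same frame is never returned twice is an assumption based on the "new frame" wording above.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

/// Hypothetical single-slot frame exchange. An int stands in for a Frame.
class FrameSlot
{
public:
    /// Producer side: publish a new frame and wake a waiting consumer.
    void put(int frame)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_frame = frame;
            m_hasNew = true;
        }
        m_cond.notify_one();
    }

    /// Consumer side, same timeout convention as getFrame(...):
    /// <0 - wait endlessly, 0 - only check, >0 - wait up to timeoutMsec.
    bool get(int& frame, int timeoutMsec = 0)
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        auto ready = [this] { return m_hasNew; };
        if (timeoutMsec < 0)
            m_cond.wait(lock, ready);
        else if (timeoutMsec > 0)
            m_cond.wait_for(lock, std::chrono::milliseconds(timeoutMsec), ready);
        if (!m_hasNew)
            return false; // No new frame within the allowed time.
        frame = m_frame;
        m_hasNew = false; // Assumption: a frame is handed out only once.
        return true;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cond;
    int m_frame{0};
    bool m_hasNew{false};
};
```

A display loop would typically use a positive timeout so that it can react to a stalled video source, while a polling loop that also does other work would pass 0.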

setMask method

The setMask(…) method sets a detection or filtering mask for object detectors or video filters in the pipeline. The method checks the module type and calls the setMask(…) method of the appropriate module (ObjectDetector or VFilter object). To set a mask, the particular object detector or video filter must be initialized in the initVPipeline(…) method and must support this capability. If the user didn’t set a particular object detector or video filter in the initVPipeline(…) method, the pipeline initializes a “dummy” module which doesn’t support masks (the setMask(…) method will return FALSE). The method accepts the mask in the form of a video frame, which allows the user to set a mask of any shape (not only rectangular). Method declaration:

bool setMask(VPipelineModule module, cr::video::Frame mask);

| Parameter | Description |

|---|---|

| module | Functional module of pipeline according to VPipelineModule enum. This method is available only for the following modules: VPipelineModule::OBJECT_DETECTOR_0 VPipelineModule::OBJECT_DETECTOR_1 VPipelineModule::OBJECT_DETECTOR_2 VPipelineModule::OBJECT_DETECTOR_3 VPipelineModule::OBJECT_DETECTOR_4 VPipelineModule::OBJECT_DETECTOR_5 VPipelineModule::OBJECT_DETECTOR_6 VPipelineModule::VIDEO_FILTER_0 VPipelineModule::VIDEO_FILTER_1 VPipelineModule::VIDEO_FILTER_2 VPipelineModule::VIDEO_FILTER_3 VPipelineModule::VIDEO_FILTER_4 VPipelineModule::VIDEO_FILTER_5 VPipelineModule::VIDEO_FILTER_6 |

| mask | Frame object which represents the detection mask. The detection mask is an image with one of the following pixel formats: GRAY, NV12, NV21, YU12 or YV12 (Fourcc enum described in Frame library). The GRAY pixel format is recommended (most common for all modules). The image can have any resolution. If the detection mask resolution differs from the resolution of the input video frames of the particular module (object detector or video filter), the module will rescale the mask to the input image resolution for processing. If the particular object detector or video filter doesn’t support masks, the method will return FALSE. |

Returns: TRUE if the detection or filtering mask is set (accepted by pipeline) or FALSE if not.

Example how to prepare rectangular mask with OpenCV and set it to different modules:

// Create mask with any resolution. It will be rescaled in pipeline.

Frame mask(256, 256, Fourcc::GRAY);

cv::Mat maskImg(256, 256, CV_8UC1, mask.data);

cv::rectangle(maskImg, cv::Point(50, 50), cv::Point(100, 100),

cv::Scalar(255, 255, 255), cv::FILLED);

// Set mask to all modules which support it.

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_0, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_1, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_2, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_3, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_4, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_5, mask);

pipeline->setMask(VPipelineModule::OBJECT_DETECTOR_6, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_0, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_1, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_2, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_3, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_4, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_5, mask);

pipeline->setMask(VPipelineModule::VIDEO_FILTER_6, mask);
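
Because the mask is an ordinary image, it does not have to be rectangular. The sketch below fills a circular region in a plain 8-bit buffer without OpenCV. The helper name makeCircularMask is hypothetical. The convention that 255 marks the region of interest and 0 the excluded area is an assumption carried over from the rectangle example above, so check how the particular object detector or video filter interprets pixel values.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

/// Build a width x height 8-bit mask with a filled circle.
/// Pixels inside the circle are 255, all other pixels are 0.
std::vector<std::uint8_t> makeCircularMask(int width, int height,
                                           int centerX, int centerY,
                                           int radius)
{
    assert(width > 0 && height > 0 && radius >= 0);
    std::vector<std::uint8_t> mask(
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height), 0);
    for (int y = 0; y < height; ++y)
    {
        for (int x = 0; x < width; ++x)
        {
            const int dx = x - centerX;
            const int dy = y - centerY;
            // Inside the circle if squared distance <= squared radius.
            if (dx * dx + dy * dy <= radius * radius)
                mask[static_cast<std::size_t>(y) * width + x] = 255;
        }
    }
    return mask;
}
```

Assuming the GRAY Frame buffer is a tightly packed width x height byte array, the result could be copied into mask.data of a Frame created with Fourcc::GRAY before calling setMask(…).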

encodeSetParamCommand static method

The encodeSetParamCommand(…) static method encodes a command to change any parameter of a remote video processing pipeline. To control a pipeline remotely, the developer has to design their own protocol, encode the command according to it and deliver it over the communication channel. To simplify this, the VPipeline class contains static methods for encoding the control commands. The VPipeline class provides two types of commands: a parameter change command (SET_PARAM) and an action command (COMMAND). The encodeSetParamCommand(…) method is designed to encode the SET_PARAM command. The pipeline doesn’t need to be initialized to encode a command. Method declaration:

static void encodeSetParamCommand(uint8_t* data,

int& size,

VPipelineModule module,

int id,

float value);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer for encoded command. Must have size >= 32 bytes. |

| size | Size of encoded data (command). Will be minimum 15 bytes. |

| module | Functional module of pipeline according to VPipelineModule enum. Commands available for all functional modules. |

| id | Parameter ID. The parameter ID depends on the functional module (see the example below). The appropriate enum must be converted to an int value. Encoding of the SET_PARAM command is available for all functional modules: VSourceParam - Video source parameters enum. Described in VSource interface class. LensParam - Lens parameters enum. Described in Lens interface class. CameraParam - Camera parameters enum. Described in Camera interface class. VTrackerParam - Video tracker parameters enum. Described in VTracker interface class. VStabiliserParam - Video stabilizer parameters enum. Described in VStabiliser interface class. VFilterParam - Video filter parameters enum. Described in VFilter interface class. GeneralParam - General pipeline parameters enum. Described in VPipeline.h file. ObjectDetectorParam - Object detector parameters enum. Described in ObjectDetector interface class. |

| value | Parameter value. Depends on parameter ID and functional module. To check valid parameters values user should check description of appropriate enum. |

Example how to encode SET_PARAM command for different video processing pipeline modules:

uint8_t buffer[32];

int size = 0;

// Encode video source parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_SOURCE, (int)VSourceParam::FOCUS_MODE, 0);

// Encode lens parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::LENS, (int)LensParam::FOCUS_POS, 1000);

// Encode camera parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::CAMERA, (int)CameraParam::BRIGHTNESS, 100);

// Encode video tracker parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_TRACKER, (int)VTrackerParam::NUM_CHANNELS, 3);

// Encode video stabilizer parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_STABILISER, (int)VStabiliserParam::TYPE, 1);

// Encode video filter parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_FILTER_1, (int)VFilterParam::LEVEL, 1.2);

// Encode video filter parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_FILTER_2, (int)VFilterParam::LEVEL, 10.5);

// Encode video filter parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_FILTER_3, (int)VFilterParam::TYPE, 2);

// Encode video filter parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_FILTER_4, (int)VFilterParam::LEVEL, 21.6);

// Encode video filter parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::VIDEO_FILTER_5, (int)VFilterParam::TYPE, 0);

// Encode object detector parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_1, (int)ObjectDetectorParam::SCALE_FACTOR, 2);

// Encode object detector parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_2, (int)ObjectDetectorParam::MIN_X_SPEED, 1.5);

// Encode object detector parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_3, (int)ObjectDetectorParam::MIN_Y_SPEED, 2.5);

// Encode object detector parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_2, (int)ObjectDetectorParam::MIN_X_SPEED, 2.5);

// Encode general pipeline parameter.

VPipeline::encodeSetParamCommand(buffer, size, VPipelineModule::GENERAL, (int)GeneralParam::LOG_MODE, 1);

encodeCommand static method

The encodeCommand(…) static method encodes an action command for a remote video processing pipeline. To control a pipeline remotely, the developer has to design their own protocol, encode the command according to it and deliver it over the communication channel. To simplify this, the VPipeline class contains static methods for encoding the control commands. The VPipeline class provides two types of commands: a parameter change command (SET_PARAM) and an action command (COMMAND). The encodeCommand(…) method is designed to encode the COMMAND command (action command). The pipeline doesn’t need to be initialized to encode a command. Method declaration:

static void encodeCommand(uint8_t* data,

int& size,

VPipelineModule module,

int id,

float arg1 = 0.0f,

float arg2 = 0.0f,

float arg3 = 0.0f);

| Parameter | Description |

|---|---|

| data | Pointer to data buffer for encoded command. Must have size >= 32 bytes. |

| size | Size of encoded data (command). Will be minimum 10 bytes. |

| module | Functional module of pipeline according to VPipelineModule enum. Commands available for all functional modules. |

| id | Action command ID. The command ID depends on the functional module (see the example below). The appropriate enum must be converted to an int value. Encoding of COMMAND is available for all modules: VSourceCommand - Video source action commands enum. Described in VSource interface class. LensCommand - Lens action commands enum. Described in Lens interface class. CameraCommand - Camera action commands enum. Described in Camera interface class. VTrackerCommand - Video tracker action commands enum. Described in VTracker interface class. VStabiliserCommand - Video stabilizer action commands enum. Described in VStabiliser interface class. ObjectDetectorCommand - Object detector commands enum. Described in ObjectDetector interface class. VFilterCommand - Video filter action commands enum. Described in VFilter interface class. GeneralCommand - General pipeline action commands enum. Described in VPipeline.h file. |

| arg1 | First command argument. Depends on command ID and functional module. To check valid arguments values user should check description of appropriate enum of particular implementation. |

| arg2 | Second command argument. Available only for video tracker module. Value depends on command ID. To check valid arguments values user should check description of appropriate enum of particular video tracker implementation. |

| arg3 | Third command argument. Available only for video tracker module. Value depends on command ID. To check valid arguments values user should check description of appropriate enum of particular video tracker implementation. |

Example how to encode COMMAND command for different video processing pipeline modules:

uint8_t buffer[32];

int size = 0;

// Encode video source action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_SOURCE, (int)VSourceCommand::RESTART);

// Encode lens action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::LENS, (int)LensCommand::ZOOM_TO_POS, 1000);

// Encode camera action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::CAMERA, (int)CameraCommand::FREEZE);

// Encode video tracker action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_TRACKER, (int)VTrackerCommand::CAPTURE, 100, 50, -1);

// Encode video stabilizer action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_STABILISER, (int)VStabiliserCommand::RESET);

// Encode video filter action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_FILTER_1, (int)VFilterCommand::ON);

// Encode video filter action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_FILTER_2, (int)VFilterCommand::OFF);

// Encode video filter action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_FILTER_3, (int)VFilterCommand::RESET);

// Encode video filter action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_FILTER_4, (int)VFilterCommand::RESET);

// Encode video filter action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::VIDEO_FILTER_5, (int)VFilterCommand::ON);

// Encode object detector action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_1, (int)ObjectDetectorCommand::ON);

// Encode object detector action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_2, (int)ObjectDetectorCommand::OFF);

// Encode object detector action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_3, (int)ObjectDetectorCommand::RESET);

// Encode object detector action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::OBJECT_DETECTOR_4, (int)ObjectDetectorCommand::RESET);

// Encode general pipeline action command.

VPipeline::encodeCommand(buffer, size, VPipelineModule::GENERAL, (int)GeneralCommand::ON);

decodeCommand static method

The decodeCommand(…) static method decodes, on the pipeline side, commands encoded by the encodeCommand(…) and encodeSetParamCommand(…) methods. To control a pipeline remotely, the developer has to design their own protocol, encode the command according to it and deliver it over the communication channel. To simplify this, the VPipeline class contains static methods for encoding the control commands. The VPipeline class provides two types of commands: a parameter change command (SET_PARAM) and an action command (COMMAND). The decodeCommand(…) method is designed to decode both command types on the pipeline side (edge device). This method is usually used if you want to intercept specific control commands. If it is not necessary to intercept specific commands, it is more convenient to use the decodeAndExecuteCommand(…) method. Method declaration:

static int decodeCommand(uint8_t* data,

int size,

VPipelineModule& module,

int& id,

float& value1,

float& value2,

float& value3);

| Parameter | Description |

|---|---|

| data | Pointer to input command. |

| size | Size of input command. |

| module | The pipeline module for which the command is intended. |

| id | Action command ID or parameter ID. The ID may belong to different command or parameter enums; the method returns it as an int value. |

| value1 | Decoded parameter value when decoding a SET_PARAM command, or the first command argument when decoding an action command (COMMAND). |

| value2 | Second command argument when decoding an action command (COMMAND). Available only for VPipelineModule::VIDEO_TRACKER. |

| value3 | Third command argument when decoding an action command (COMMAND). Available only for VPipelineModule::VIDEO_TRACKER. |

Returns: 0 if a COMMAND was decoded, 1 if a SET_PARAM command was decoded or -1 in case of an error.

Example how to decode command:

// Decode command.

VPipelineModule module;

int id;

float value1, value2, value3;

int res = VPipeline::decodeCommand(data, size, module, id, value1, value2, value3);

if (res == 0) // COMMAND

return pipeline->executeCommand(module, id, value1, value2, value3);

else if (res == 1) // SET_PARAM

return pipeline->setParam(module, id, value1);

else // ERROR

return false;
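
Intercepting usually means inspecting the decoded module and ID before forwarding the command. A minimal sketch of such a check follows. It redeclares only the enum values needed for the illustration (the numeric values follow from the declarations in VPipeline.h), and both the function name isCommandAllowed and the policy itself (refusing GeneralCommand::OFF from a remote client) are purely hypothetical.

```cpp
#include <cassert>

// Stand-ins for the enums declared in VPipeline.h (only the values used here).
enum class VPipelineModule { VIDEO_SOURCE = 1, GENERAL = 20 };
enum class GeneralCommand { ON = 1, OFF };

/// Result codes returned by decodeCommand(...).
constexpr int DECODED_COMMAND = 0;
constexpr int DECODED_SET_PARAM = 1;

/// Hypothetical policy: a remote client may not disable the whole pipeline.
/// Returns true if the decoded command may be forwarded to the pipeline.
bool isCommandAllowed(int decodeResult, VPipelineModule module, int id)
{
    if (decodeResult != DECODED_COMMAND && decodeResult != DECODED_SET_PARAM)
        return false; // Decoding failed (-1), nothing to forward.
    if (decodeResult == DECODED_COMMAND &&
        module == VPipelineModule::GENERAL &&
        id == static_cast<int>(GeneralCommand::OFF))
        return false; // Intercepted: do not call executeCommand(...).
    return true;
}
```

In the decoding example above, such a check would sit between the decodeCommand(…) call and the calls to executeCommand(…) or setParam(…).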

decodeAndExecuteCommand method

The decodeAndExecuteCommand(…) method decodes commands encoded by the encodeSetParamCommand(…) and encodeCommand(…) methods using the decodeCommand(…) method and calls the setParam(…) or executeCommand(…) method accordingly. This method is used to simplify remote pipeline control: the user only needs to pass the input command data to the pipeline. Method declaration:

bool decodeAndExecuteCommand(uint8_t* data, int size);

| Parameter | Description |

|---|---|

| data | Pointer to input command. |

| size | Size of input command. |

Returns: TRUE if command decoded and executed or FALSE if not.

Implementation of the method inside the pipeline, which explains the principle of the method:

bool VPipeline::decodeAndExecuteCommand(uint8_t* data, int size)

{

// Decode command.

VPipelineModule module = VPipelineModule::VIDEO_SOURCE;

int id = 0; float arg1 = -1.0f; float arg2 = -1.0f; float arg3 = -1.0f;

int ret = VPipeline::decodeCommand(data, size, module, id, arg1, arg2, arg3);

// Set param or execute command.

if (ret == 1)

return VPipeline::setParam(module, id, arg1);

else if (ret == 0)

return VPipeline::executeCommand(module, id, arg1, arg2, arg3);

return false;

}

Enums

This section lists the C++ enums declared in the VPipeline.h file. Other C++ enums used in methods of VPipeline class are declared in libraries: Camera, Lens, ObjectDetector, VSource, VTracker, VFilter and VStabiliser.

VPipelineModule enum

The VPipelineModule enum is declared in the VPipeline.h file and lists the pipeline functional modules. Enum declaration:

enum class VPipelineModule

{

/// Video source.

VIDEO_SOURCE = 1,

/// Lens.

LENS,

/// Camera.

CAMERA,

/// Video tracker.

VIDEO_TRACKER,

/// Video stabilizer.

VIDEO_STABILISER,

/// Video filter 0.

VIDEO_FILTER_0,

/// Video filter 1.

VIDEO_FILTER_1,

/// Video filter 2.

VIDEO_FILTER_2,

/// Video filter 3.

VIDEO_FILTER_3,

/// Video filter 4.

VIDEO_FILTER_4,

/// Video filter 5.

VIDEO_FILTER_5,

/// Video filter 6.

VIDEO_FILTER_6,

/// Object detector 0.

OBJECT_DETECTOR_0,

/// Object detector 1.

OBJECT_DETECTOR_1,

/// Object detector 2.

OBJECT_DETECTOR_2,

/// Object detector 3.

OBJECT_DETECTOR_3,

/// Object detector 4.

OBJECT_DETECTOR_4,

/// Object detector 5.

OBJECT_DETECTOR_5,

/// Object detector 6.

OBJECT_DETECTOR_6,

/// General.

GENERAL

};

Table 2 - VPipelineModule enum values.

| Value | Description |

|---|---|

| VIDEO_SOURCE | Video source module represented by VSource interface class. |

| LENS | Lens controller module represented by Lens interface class. |

| CAMERA | Camera controller module represented by Camera interface class. |

| VIDEO_TRACKER | Video tracker module represented by VTracker interface class. |

| VIDEO_STABILISER | Video stabilizer module represented by VStabiliser interface class. |

| VIDEO_FILTER_0 | Video filter 0 module represented by VFilter interface class. |

| VIDEO_FILTER_1 | Video filter 1 module represented by VFilter interface class. |

| VIDEO_FILTER_2 | Video filter 2 module represented by VFilter interface class. |

| VIDEO_FILTER_3 | Video filter 3 module represented by VFilter interface class. |

| VIDEO_FILTER_4 | Video filter 4 module represented by VFilter interface class. |

| VIDEO_FILTER_5 | Video filter 5 module represented by VFilter interface class. |

| VIDEO_FILTER_6 | Video filter 6 module represented by VFilter interface class. |

| OBJECT_DETECTOR_0 | Object detector 0 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_1 | Object detector 1 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_2 | Object detector 2 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_3 | Object detector 3 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_4 | Object detector 4 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_5 | Object detector 5 module represented by ObjectDetector interface class. |

| OBJECT_DETECTOR_6 | Object detector 6 module represented by ObjectDetector interface class. |

| GENERAL | General module (general pipeline params). |
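
Since only VIDEO_SOURCE has an explicit value, the remaining enumerators take consecutive values, so the seven video filters and the seven object detectors form contiguous ranges. This makes it possible to address them by index, for example to loop over all filters. The sketch below repeats the enum declaration so that it compiles on its own. The helper names videoFilterModule and objectDetectorModule are hypothetical and not part of the library.

```cpp
#include <cassert>

enum class VPipelineModule
{
    VIDEO_SOURCE = 1, LENS, CAMERA, VIDEO_TRACKER, VIDEO_STABILISER,
    VIDEO_FILTER_0, VIDEO_FILTER_1, VIDEO_FILTER_2, VIDEO_FILTER_3,
    VIDEO_FILTER_4, VIDEO_FILTER_5, VIDEO_FILTER_6,
    OBJECT_DETECTOR_0, OBJECT_DETECTOR_1, OBJECT_DETECTOR_2,
    OBJECT_DETECTOR_3, OBJECT_DETECTOR_4, OBJECT_DETECTOR_5,
    OBJECT_DETECTOR_6,
    GENERAL
};

/// Number of video filters and object detectors listed in the enum.
constexpr int NUM_FILTERS = 7;
constexpr int NUM_DETECTORS = 7;

/// Hypothetical helper: video filter module by index (0..6).
VPipelineModule videoFilterModule(int index)
{
    assert(index >= 0 && index < NUM_FILTERS);
    return static_cast<VPipelineModule>(
        static_cast<int>(VPipelineModule::VIDEO_FILTER_0) + index);
}

/// Hypothetical helper: object detector module by index (0..6).
VPipelineModule objectDetectorModule(int index)
{
    assert(index >= 0 && index < NUM_DETECTORS);
    return static_cast<VPipelineModule>(
        static_cast<int>(VPipelineModule::OBJECT_DETECTOR_0) + index);
}

// Compile-time check of the values implied by the declaration order.
static_assert(static_cast<int>(VPipelineModule::VIDEO_FILTER_6) == 12,
              "video filters must occupy values 6..12");
static_assert(static_cast<int>(VPipelineModule::GENERAL) == 20,
              "GENERAL must be the 20th enumerator");
```

With such helpers, the fourteen setMask(…) calls shown in the setMask method example could be written as two short loops over the filter and detector indexes. The static_assert lines guard against a future change in the enum order.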

GeneralParam enum

The GeneralParam enum is declared in the VPipeline.h file and lists general pipeline parameter IDs. Enum declaration:

enum class GeneralParam

{

/// Logging mode. Values: 0 - Disable, 1 - Only file,

/// 2 - Only terminal (console), 3 - File and terminal (console).

LOG_MODE = 1,

/// Mode: 0 - disable, 1 - enable.

MODE,

/// Tracker centering mode: 0 - disable, 1 - enable.

TRACKER_CENTERING_ENABLE_MODE,

/// Tracker centering max offset X.

TRACKER_CENTERING_MAX_OFFSET_X,

/// Tracker centering max offset Y.

TRACKER_CENTERING_MAX_OFFSET_Y

};

Table 3 - GeneralParam enum values.

| Value | Description |

|---|---|

| LOG_MODE | Logging mode: 0 - Disable (default value). 1 - Only file. 2 - Only terminal (console). 3 - File and terminal (console). The VPipeline library uses the Logger library for printing logs to the console and/or a file. Note: to enable printing logs to files for all modules, the user has to initialize it by using the static method setSaveLogParams(…) of the Logger library. This parameter is used only for the pipeline and doesn’t set the logging mode for particular functional modules. To set the logging mode for particular functional modules, use the appropriate parameters enums. |

| MODE | Video processing pipeline mode: 0 - disabled, 1 - enabled. If pipeline is disabled, it should not perform any processing. If the pipeline is switched on again, processing must be resumed. |

| TRACKER_CENTERING_ENABLE_MODE | Video tracking centering mode: 0 - disabled, 1 - enabled. If video tracking centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode). |

| TRACKER_CENTERING_MAX_OFFSET_X | Maximum horizontal image offset (pixels) for video tracking centering mode. If video tracking centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode), but the offset can’t exceed the maximum offset (the image will be moved by at most the maximum allowed offset). |

| TRACKER_CENTERING_MAX_OFFSET_Y | Maximum vertical image offset (pixels) for video tracking centering mode. If video tracking centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode), but the offset can’t exceed the maximum offset (the image will be moved by at most the maximum allowed offset). |
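
The effect of the maximum offset parameters can be illustrated as a clamp applied to the offset needed to center the tracking rectangle. This is a sketch under assumptions, not the pipeline's actual code: the function name centeringOffset, the sign convention (offset = frame center minus rectangle center) and the symmetric limit [-maxOffset, +maxOffset] are all assumed for illustration.

```cpp
#include <algorithm>
#include <cassert>

/// Offset (pixels) along one axis that would move the tracking rectangle
/// center onto the frame center, limited to [-maxOffset, +maxOffset].
int centeringOffset(int frameSize, int rectCenter, int maxOffset)
{
    assert(frameSize > 0 && maxOffset >= 0);
    // Offset that would center the rectangle exactly.
    const int wanted = frameSize / 2 - rectCenter;
    // Limit the offset to the maximum allowed value in both directions.
    return std::max(-maxOffset, std::min(wanted, maxOffset));
}
```

The same function would be applied separately per axis, once with the frame width and TRACKER_CENTERING_MAX_OFFSET_X and once with the frame height and TRACKER_CENTERING_MAX_OFFSET_Y. With a maximum offset of 0 the image is never moved.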

GeneralCommand enum

The GeneralCommand enum is declared in the VPipeline.h file and lists general pipeline action command IDs. Enum declaration:

enum class GeneralCommand

{

/// Enable video processing pipeline.

ON = 1,

/// Disable video processing pipeline.

OFF

};

Table 4 - GeneralCommand enum values.

| Value | Description |

|---|---|

| ON | Enable pipeline. If the pipeline is already enabled this command will do nothing. If the pipeline is disabled this command will start video processing. Even if the pipeline is disabled the user can get video from the video source without processing by any functional modules of the pipeline. |

| OFF | Disable pipeline. If the pipeline is already disabled this command will do nothing. If the pipeline is enabled this command will exclude all functional modules from video processing except the video source. Even if the pipeline is disabled the user can get video from the video source without processing by any functional modules of the pipeline. |

VPipelineParams class description

The VPipelineParams class is used for pipeline initialization (initVPipeline(…) method) or to get all actual params (getParams(…) method), including detection and video tracking results. VPipelineParams also provides a structure to write/read params to/from JSON files (JSON_READABLE macro) and methods to encode (serialize) and decode (deserialize) params.

VPipelineParams class declaration

The VPipeline.h file contains VPipelineParams class declaration. Class declaration:

class VPipelineParams

{

public:

/// General params.

class GeneralParams

{

public:

/// Logging mode. Values: 0 - Disable, 1 - Only file,

/// 2 - Only terminal (console), 3 - File and terminal (console).

int logMode{0};

/// Main cycle processing time.

int mainProcessingTimeUs{0};

/// Enable.

bool enable{true};

/// Tracker centering enable.

bool trackerCenteringEnable{ false };

/// Tracker centering current offset X.

int trackerCenteringOffsetX{ 0 };

/// Tracker centering current offset Y.

int trackerCenteringOffsetY{ 0 };

/// Tracker centering max offset X.

int trackerCenteringMaxOffsetX{ 0 };

/// Tracker centering max offset Y.

int trackerCenteringMaxOffsetY{ 0 };

JSON_READABLE(GeneralParams, logMode, enable, trackerCenteringEnable,

trackerCenteringMaxOffsetX, trackerCenteringMaxOffsetY)

};

/// Video source params.

cr::video::VSourceParams videoSource;

/// Video stabilizer params.

cr::vstab::VStabiliserParams videoStabiliser;

/// Video tracker params.

cr::vtracker::VTrackerParams videoTracker;

/// Object detector 0 params.

cr::detector::ObjectDetectorParams objectDetector0;

/// Object detector 1 params.

cr::detector::ObjectDetectorParams objectDetector1;

/// Object detector 2 params.

cr::detector::ObjectDetectorParams objectDetector2;

/// Object detector 3 params.

cr::detector::ObjectDetectorParams objectDetector3;

/// Object detector 4 params.

cr::detector::ObjectDetectorParams objectDetector4;

/// Object detector 5 params.

cr::detector::ObjectDetectorParams objectDetector5;

/// Object detector 6 params.

cr::detector::ObjectDetectorParams objectDetector6;

/// Lens params.

cr::lens::LensParams lens;

/// Camera params.

cr::camera::CameraParams camera;

/// Video filter 0 params.

cr::video::VFilterParams videoFilter0;

/// Video filter 1 params.

cr::video::VFilterParams videoFilter1;

/// Video filter 2 params.

cr::video::VFilterParams videoFilter2;

/// Video filter 3 params.

cr::video::VFilterParams videoFilter3;

/// Video filter 4 params.

cr::video::VFilterParams videoFilter4;

/// Video filter 5 params.

cr::video::VFilterParams videoFilter5;

/// Video filter 6 params.

cr::video::VFilterParams videoFilter6;

/// General params.

GeneralParams general;

JSON_READABLE(VPipelineParams, videoSource, videoStabiliser, videoTracker,

objectDetector0, objectDetector1, objectDetector2,

objectDetector3, objectDetector4, objectDetector5,

objectDetector6, lens, camera, videoFilter0, videoFilter1,

videoFilter2, videoFilter3, videoFilter4, videoFilter5,

videoFilter6, general)

/**

* @brief Encode params (serialize).

* @param data Pointer to data buffer.

* @param bufferSize Data buffer size.

* @param size Size of data.

* @param mask Pointer to parameters mask.

* @return TRUE if params encoded (serialized) or FALSE if not.

*/

bool encode(uint8_t* data, int bufferSize, int& size,

VPipelineParamsMask* mask = nullptr);

/**

* @brief Decode params (deserialize).

* @param data Pointer to data.

* @param dataSize Data size.

* @return TRUE if params decoded or FALSE if not.

*/

bool decode(uint8_t* data, int dataSize);

};

Table 5 - GeneralParams class fields description.

| Field | Type | Description |

|---|---|---|

| logMode | int | Logging mode: 0 - Disable (default value). 1 - Only file. 2 - Only terminal (console). 3 - File and terminal (console). The VPipeline library uses the Logger library to print logs to the console and/or a file. Note: to enable printing logs to files, the user has to initialize file logging with the static method setSaveLogParams(…) of the Logger library. This parameter is used only for the pipeline itself and doesn’t set the logging mode for particular functional modules. To set the logging mode for particular functional modules, use the appropriate parameter enums. |

| enable | bool | Video processing pipeline disable / enable. If the pipeline is disabled, it will not perform any processing. Even if the pipeline is disabled, the user can get video from the video source without processing by any functional modules of the pipeline. |

| mainProcessingTimeUs | int | Main video processing cycle time, microseconds. Required to control pipeline performance. |

| trackerCenteringEnable | bool | Video tracker centering disable / enable. If video tracker centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode). |

| trackerCenteringOffsetX | int | Current horizontal image offset for video tracker centering mode. If video tracker centering mode is disabled the value will be 0. |

| trackerCenteringOffsetY | int | Current vertical image offset for video tracker centering mode. If video tracker centering mode is disabled the value will be 0. |

| trackerCenteringMaxOffsetX | int | Maximum horizontal image offset (pixels) for video tracker centering mode. If video tracker centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode), but the offset can’t exceed this maximum (the image will be moved by the maximum allowed offset instead). |

| trackerCenteringMaxOffsetY | int | Maximum vertical image offset (pixels) for video tracker centering mode. If video tracker centering mode is enabled and the video tracker is in TRACKING mode (mode == 1), the pipeline will move the image to keep the tracking rectangle in the center of the image (see Video tracker centering mode), but the offset can’t exceed this maximum (the image will be moved by the maximum allowed offset instead). |

Other parameters:

VSourceParams - Video source parameters class. Described in VSource interface class.

LensParams - Lens parameters class. Described in Lens interface class.

CameraParams - Camera parameters class. Described in Camera interface class.

VTrackerParams - Video tracker parameters class. Described in VTracker interface class.

VStabiliserParams - Video stabilizer parameters class. Described in VStabiliser interface class.

ObjectDetectorParams - Object detector parameters class. Described in ObjectDetector interface class.

VFilterParams - Video filter parameters class. Described in VFilter interface class.

Serialize params

The VPipelineParams class provides the method encode(…) to serialize pipeline params (fields of the VPipelineParams class). Serialization is necessary when you need to send params over communication channels. The method can exclude particular parameters from serialization. To do this, the method inserts a binary mask into the encoded data, where each bit represents a particular parameter, and the decode(…) method recognizes it. Method declaration:

bool encode(uint8_t* data, int bufferSize, int& size,

VPipelineParamsMask* mask = nullptr);

| Parameter | Value |

|---|---|

| data | Pointer to data buffer. |

| bufferSize | Data buffer size. |

| size | Size of encoded data. |

| mask | Parameters mask - pointer to VPipelineParamsMask structure. VPipelineParamsMask (declared in VPipeline.h file) defines a flag for each field (parameter) declared in the VPipelineParams class. To exclude a particular parameter from serialization, set the corresponding flag in the VPipelineParamsMask structure to FALSE and pass a pointer to the structure in the method call. VPipelineParamsMask includes additional mask structures: VSourceParamsMask (declared in VSource interface class), VStabiliserParamsMask (declared in VStabiliser interface class), VTrackerParamsMask (declared in VTracker interface class), ObjectDetectorParamsMask (declared in ObjectDetector interface class), LensParamsMask (declared in Lens interface class), VFilterParamsMask (declared in VFilter interface class), CameraParamsMask (declared in Camera interface class) and GeneralParamsMask (declared in VPipeline.h file). |

Returns: TRUE if params were serialized or FALSE if not (bufferSize is not enough for the serialized data).

VPipelineParamsMask and additional structures declaration:

struct GeneralParamsMask

{

bool logMode{ true };

bool mainProcessingTimeUs{ true };

bool enable{ true };

bool trackerCenteringEnable{ true };

bool trackerCenteringOffsetX{ true };

bool trackerCenteringOffsetY{ true };

bool trackerCenteringMaxOffsetX{ true };

bool trackerCenteringMaxOffsetY{ true };

};

struct VPipelineParamsMask

{

cr::video::VSourceParamsMask videoSource;

cr::vstab::VStabiliserParamsMask videoStabiliser;

cr::vtracker::VTrackerParamsMask videoTracker;

cr::detector::ObjectDetectorParamsMask objectDetector0;

cr::detector::ObjectDetectorParamsMask objectDetector1;

cr::detector::ObjectDetectorParamsMask objectDetector2;

cr::detector::ObjectDetectorParamsMask objectDetector3;

cr::detector::ObjectDetectorParamsMask objectDetector4;

cr::detector::ObjectDetectorParamsMask objectDetector5;

cr::detector::ObjectDetectorParamsMask objectDetector6;

cr::lens::LensParamsMask lens;

cr::camera::CameraParamsMask camera;

cr::video::VFilterParamsMask videoFilter0;

cr::video::VFilterParamsMask videoFilter1;

cr::video::VFilterParamsMask videoFilter2;

cr::video::VFilterParamsMask videoFilter3;

cr::video::VFilterParamsMask videoFilter4;

cr::video::VFilterParamsMask videoFilter5;

cr::video::VFilterParamsMask videoFilter6;

GeneralParamsMask general;

};

Parameters serialization example without parameters mask:

// Create params object.

VPipelineParams in;

// Encode params.

uint8_t data[8192];

int size = 0;

if (!in.encode(data, 8192, size))

cout << "ERROR: Can't encode params" << endl;

cout << "Encoded data size: " << size << " bytes" << endl;

Parameters serialization example with parameters mask:

// Fill params mask.

VPipelineParamsMask mask;

// Exclude some lens params from serialization.

mask.lens.zoomPos = false;

// Exclude some camera params from serialization.

mask.camera.width = false;

mask.camera.height = false;

// Exclude some video source params from serialization.

mask.videoSource.logLevel = false;

mask.videoSource.cycleTimeMks = false;

// Exclude some video stabilizer params from serialization.

mask.videoStabiliser.scaleFactor = false;

mask.videoStabiliser.xOffsetLimit = false;

// Exclude some video tracker params from serialization.

mask.videoTracker.mode = false;

mask.videoTracker.rectX = false;

mask.videoTracker.rectY = false;

// Exclude some object detector 1 params from serialization.

mask.objectDetector1.logMode = false;

mask.objectDetector1.frameBufferSize = false;

// Exclude some object detector 2 params from serialization.

mask.objectDetector2.logMode = false;

mask.objectDetector2.frameBufferSize = false;

// Exclude some object detector 3 params from serialization.

mask.objectDetector3.logMode = false;

mask.objectDetector3.frameBufferSize = false;

// Exclude some video filter params from serialization.

mask.videoFilter2.enable = false;

mask.videoFilter3.level = false;

// Create params object.

VPipelineParams in;

// Encode params.

uint8_t data[8192];

int size = 0;

if (!in.encode(data, 8192, size, &mask))

return false;

cout << "Encoded data size: " << size << " bytes" << endl;

Deserialize params

The VPipelineParams class provides the method decode(…) to deserialize params (fields of the VPipelineParams class). Deserialization is necessary when you need to receive params over communication channels. The method automatically recognizes which parameters were serialized by the encode(…) method. Method declaration:

bool decode(uint8_t* data, int dataSize);

| Parameter | Value |

|---|---|

| data | Pointer to data buffer. |

| dataSize | Size of serialized data. |

Returns: TRUE if params deserialized or FALSE if not.

Example:

// Encode params.

VPipelineParams in;

uint8_t data[8192];

int size = 0;

if (!in.encode(data, 8192, size))

cout << "ERROR: Can't encode params" << endl;

cout << "Encoded data size: " << size << " bytes" << endl;

// Decode params.

VPipelineParams out;

if (!out.decode(data, size))

cout << "ERROR: Can't decode params" << endl;

Read params from JSON file and write to JSON file

The VPipeline library depends on the ConfigReader library, which provides methods to read params from a JSON file and to write params to a JSON file. Example of writing params to a JSON file and reading them back:

// Prepare params.

VPipelineParams in;

// Write params to file.

cr::utils::ConfigReader inConfig;

inConfig.set(in, "VPipelineParams");

inConfig.writeToFile("TestVPipelineParams.json");

// Read params from file.

cr::utils::ConfigReader outConfig;

if (!outConfig.readFromFile("TestVPipelineParams.json"))

cout << "Can't open config file" << endl;

// Parse JSON file to params structure.

VPipelineParams out;

if (!outConfig.get(out, "VPipelineParams"))

cout << "Can't read params from file" << endl;

TestVPipelineParams.json will look like this (part of the file):

{

"VPipelineParams": {

"camera": {

"agcMode": 243,

"alcGate": 119,

...

},

"general": {

"enable": true,

"logMode": 82,

"trackerCenteringEnable": true,

"trackerCenteringMaxOffsetX": 45,

"trackerCenteringMaxOffsetY": 197

},

"lens": {

"afHwSpeed": 221,

"afRange": 12,

"afRoiMode": 6,

...

},

"objectDetector0": {

"classNames": [

"0",

"1",

"2"

],

"custom1": 205.0,

"custom2": 225.0,

"custom3": 15.0,

"enable": false,

"frameBufferSize": 211,

...

},

"objectDetector1": {

...

},

"objectDetector2": {

...

},

"objectDetector3": {

...

},

"objectDetector4": {

...

},

"objectDetector5": {

...

},

"objectDetector6": {

...

},

"videoFilter0": {

"custom1": 142.0,

"custom2": 32.0,

"custom3": 127.0,

"level": 232.0,

"mode": 217,

"type": 145

},

"videoFilter1": {

...

},

"videoFilter2": {

...

},

"videoFilter3": {

...

},

"videoFilter4": {

...

},

"videoFilter5": {

...

},

"videoFilter6": {

...

},

"videoSource": {

"custom1": 211.0,

"custom2": 179.0,

"custom3": 25.0,

"exposureMode": 218,

"focusMode": 192,

...

},

"videoStabiliser": {

"aFilterCoeff": 251.0,

"aOffsetLimit": 144.0,

"backend": 99,

...

},

"videoTracker": {

"custom1": 100.0,

"custom2": 150.0,

...

}

}

}

Build and connect to your project

Typical commands to build VPipeline library:

cd VPipeline

mkdir build

cd build

cmake ..

make

If you want to connect the VPipeline library to your CMake project as source code, you can do the following. For example, if your repository has the structure:

CMakeLists.txt