VPipelineDemo application

An example of VPipeline C++ library usage

v1.1.0

Table of contents

- Overview

- Application versions

- Application files

- Build application

- Launch and user interface

- Control

- Prepare compiled library files

Overview

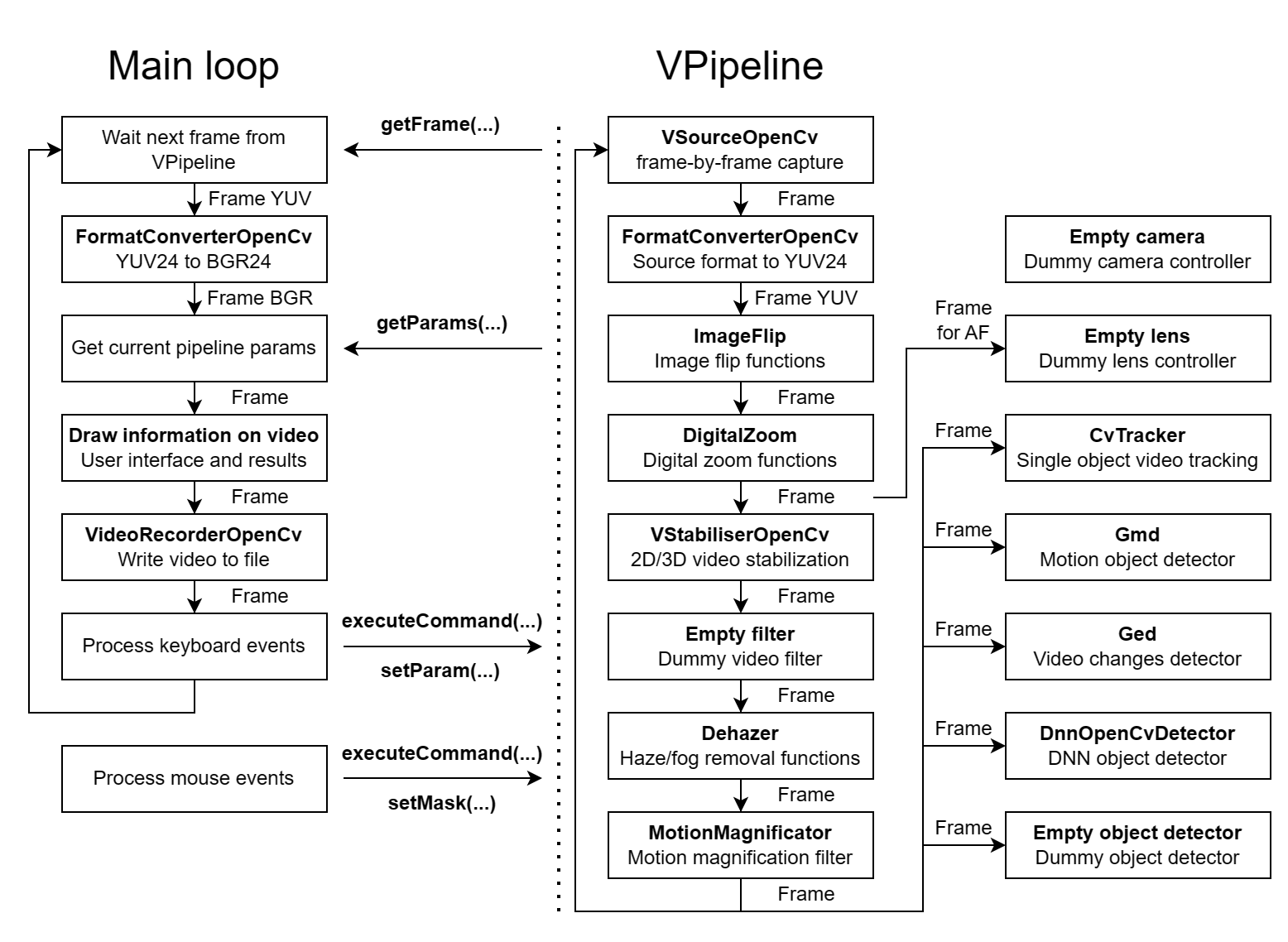

VPipelineDemo is an example of VPipeline library usage. The repository includes the source code of the application itself and the source code of all the libraries it requires. The application shows how to initialize and use the VPipeline library. The repository is a CMake project and can be used as a template for your own project. It provides VPipeline initialization, a basic user interface based on OpenCV, and control of all video processing pipeline modules (filters, video tracker, detectors). The application doesn’t provide camera and lens control.

After start, the application initializes VPipeline with the following libraries: VSourceOpenCv (video source module), ImageFlip (first video filter), DigitalZoom (second video filter), VStabiliserOpenCv (video stabilization module), Dehazer (fourth video filter), MotionMagnificator (fifth video filter), CvTracker (video tracker module), Gmd (motion detector library, first object detector), Ged (video changes detector library, second object detector) and DnnOpenCvDetector (neural network object detector library, third object detector). The application doesn’t initialize lens and camera controllers (dummy camera and lens controllers are initialized instead). It also doesn’t initialize the third video filter (a dummy video filter is initialized) or the fourth object detector (a dummy object detector is initialized). After initialization the application runs its main loops. The application uses the C++17 standard. The image below shows the processing pipeline structure:

The application reads configuration parameters from the VPipelineDemo.json file and initializes VPipeline. After initialization, VPipeline runs an internal processing loop which includes: video capture, image flip, digital zoom, video stabilization, defog / dehaze, motion magnification, video tracking (separate thread), motion detection (separate thread), video changes detection (separate thread) and DNN (neural network) detection (separate thread). The user can choose to open video files through a file dialog provided by the SimpleFileDialog library. The application main loop waits for a video frame from the VPipeline library, converts it from YUV24 format (native format of the video processing pipeline) to BGR24 (native format of OpenCV), obtains the current parameters from the VPipeline library (parameters of all modules and object detection results), draws information on the video, records the video (using the VideoRecorderOpenCv library), displays the video with the information to the user and processes keyboard events (key presses). The application shows how to control each module of the VPipeline library.
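The YUV24 to BGR24 step of the main loop can be illustrated with a small standard-library sketch. It is not the application's actual code: the function name `yuv24ToBgr24`, the packed Y, U, V byte order and the full-range BT.601 coefficients are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Round and clamp a floating-point channel value to the 0..255 byte range.
static std::uint8_t toByte(double value)
{
    return static_cast<std::uint8_t>(std::clamp(value + 0.5, 0.0, 255.0));
}

// Hypothetical helper: convert a packed YUV buffer (assumed layout: Y, U, V
// bytes per pixel) to a packed BGR buffer (B, G, R bytes per pixel).
// The coefficients are full-range BT.601, which is an assumption here.
std::vector<std::uint8_t> yuv24ToBgr24(const std::vector<std::uint8_t>& yuv)
{
    std::vector<std::uint8_t> bgr(yuv.size());
    for (std::size_t i = 0; i + 2 < yuv.size(); i += 3)
    {
        const double y = yuv[i];
        const double u = yuv[i + 1] - 128.0; // Centered blue-difference chroma.
        const double v = yuv[i + 2] - 128.0; // Centered red-difference chroma.
        bgr[i]     = toByte(y + 1.772 * u);                 // Blue.
        bgr[i + 1] = toByte(y - 0.344136 * u - 0.714136 * v); // Green.
        bgr[i + 2] = toByte(y + 1.402 * v);                 // Red.
    }
    return bgr;
}
```

In an OpenCV-based application the same step is more likely done with `cv::cvtColor` and the `cv::COLOR_YUV2BGR` code; the explicit loop only makes the per-pixel arithmetic visible.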

Application versions

Table 1 - Application versions.

| Version | Release date | What’s new |

|---|---|---|

| 1.0.0 | 07.05.2024 | First version. |

| 1.0.1 | 22.05.2024 | - Submodules updated. - Documentation updated. |

| 1.0.2 | 02.08.2024 | - Submodules updated. |

| 1.0.3 | 18.09.2024 | - CvTracker submodule updated. |

| 1.0.4 | 05.10.2024 | - Update submodules. |

| 1.0.5 | 04.12.2024 | - Update submodules. |

| 1.0.6 | 15.12.2024 | - Update submodules. |

| 1.0.7 | 02.02.2025 | - Update submodules. |

| 1.0.8 | 24.02.2025 | - Update submodules. |

| 1.0.9 | 18.03.2025 | - Update CvTracker submodule. |

| 1.0.10 | 03.04.2025 | - Multiple submodules update. |

| 1.0.11 | 27.04.2025 | - Multiple submodules update. |

| 1.0.12 | 15.05.2025 | - CvTracker submodule update. |

| 1.0.13 | 19.07.2025 | - CvTracker submodule update. - Gmd submodule update. |

| 1.0.14 | 10.08.2025 | - CvTracker submodule update. - Gmd submodule update. |

| 1.1.0 | 16.08.2025 | - CMake structure changed. |

Application files

The VPipelineDemo is provided as source code. Users are given a set of files in the form of a CMake project (repository). The repository structure is outlined below:

CMakeLists.txt ----------- Main CMake file of the application.
3rdparty ----------------- Folder with third-party libraries.
    CMakeLists.txt ------- CMake file which includes third-party libraries.
    CvTracker ------------ Folder with CvTracker library source code.
    Dehazer -------------- Folder with Dehazer library source code.
    DigitalZoom ---------- Folder with DigitalZoom library source code.
    DnnOpenCvDetector ---- Folder with DnnOpenCvDetector library source code.
    Ged ------------------ Folder with Ged library source code.
    Gmd ------------------ Folder with Gmd library source code.
    ImageFlip ------------ Folder with ImageFlip library source code.
    MotionMagnificator --- Folder with MotionMagnificator library source code.
    SimpleFileDialog ----- Folder with SimpleFileDialog library source code.
    VideoRecorderOpenCv -- Folder with VideoRecorderOpenCv library source code.
    VPipeline ------------ Folder with VPipeline library source code.
    VSourceOpenCv -------- Folder with VSourceOpenCv library source code.
    VStabiliserOpenCv ---- Folder with VStabiliserOpenCv library source code.
src ---------------------- Folder with source code of the application.
    CMakeLists.txt ------- CMake file of the application.
    main.cpp ------------- Source code file of the application.

Build application

VPipelineDemo is a complete repository in the form of a CMake project. The application and all included libraries depend only on OpenCV. Before compiling, you have to install the OpenCV library in your OS. You also have to install CMake to compile the application. To install the dependencies on Linux (Ubuntu), use the command:

sudo apt-get install cmake libopencv-dev

Typical commands to build the VPipelineDemo application (Release mode):

cd VPipelineDemo

mkdir build

cd build

cmake -DCMAKE_BUILD_TYPE=Release ..

make

Launch and user interface

After compiling you will get the VPipelineDemo.exe executable file on Windows or the VPipelineDemo executable file on Linux. To run the application on Linux, run the command:

./VPipelineDemo

Note: on Windows you may need to copy the OpenCV dll files to the folder with the application’s executable file. After start, the application will create the configuration file VPipelineDemo.json with default VPipeline parameters if it doesn’t exist. However, it is recommended to copy the configuration file (VPipelineDemo.json) and the neural network file for the DNN object detector (yolov7-tiny_640x640.onnx) from the static folder of the VPipelineDemo repository. Default contents of the configuration file:

{

"Params": {

"camera": {

"agcMode": 0,

"alcGate": 0,

"autoNucIntervalMsec": 0,

"blackAndWhiteFilterMode": 0,

"brightness": 0,

"brightnessMode": 0,

"changingLevel": 0.0,

"changingMode": 0,

"chromaLevel": 0,

"contrast": 0,

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"ddeLevel": 0.0,

"ddeMode": 0,

"defogMode": 0,

"dehazeMode": 0,

"detail": 0,

"digitalZoom": 0.0,

"digitalZoomMode": 0,

"displayMode": 0,

"exposureCompensationMode": 0,

"exposureCompensationPosition": 0,

"exposureMode": 0,

"exposureTime": 0,

"filterMode": 0,

"fps": 0.0,

"gain": 0,

"gainMode": 0,

"height": 0,

"imageFlip": 0,

"initString": "",

"isoSensitivity": 0,

"logMode": 0,

"noiseReductionMode": 0,

"nucMode": 0,

"palette": 0,

"profile": 0,

"roiX0": 0,

"roiX1": 0,

"roiY0": 0,

"roiY1": 0,

"sceneMode": 0,

"sensitivity": 0.0,

"sharpening": 0,

"sharpeningMode": 0,

"shutterMode": 0,

"shutterPos": 0,

"shutterSpeed": 0,

"stabilizationMode": 0,

"type": 0,

"videoOutput": 0,

"whiteBalanceArea": 0,

"whiteBalanceMode": 0,

"wideDynamicRangeMode": 0,

"width": 0

},

"general": {

"enable": true,

"logMode": 2

},

"lens": {

"afHwSpeed": 0,

"afRange": 0,

"afRoiMode": 0,

"afRoiX0": 0,

"afRoiX1": 0,

"afRoiY0": 0,

"afRoiY1": 0,

"autoAfRoiBorder": 0,

"autoAfRoiHeight": 0,

"autoAfRoiWidth": 0,

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"extenderMode": 0,

"filterMode": 0,

"focusFactorThreshold": 0.0,

"focusHwFarLimit": 0,

"focusHwMaxSpeed": 0,

"focusHwNearLimit": 0,

"focusMode": 0,

"fovPoints": [],

"initString": "",

"irisHwCloseLimit": 0,

"irisHwMaxSpeed": 0,

"irisHwOpenLimit": 0,

"irisMode": 0,

"logMode": 0,

"refocusTimeoutSec": 0,

"stabiliserMode": 0,

"type": 0,

"zoomHwMaxSpeed": 0,

"zoomHwTeleLimit": 0,

"zoomHwWideLimit": 0

},

"objectDetector1": {

"classNames": [

""

],

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"enable": true,

"frameBufferSize": 5,

"initString": "",

"logMode": 2,

"maxObjectHeight": 128,

"maxObjectWidth": 128,

"maxXSpeed": 30.0,

"maxYSpeed": 30.0,

"minDetectionProbability": 0.5,

"minObjectHeight": 4,

"minObjectWidth": 4,

"minXSpeed": 0.009999999776482582,

"minYSpeed": 0.009999999776482582,

"numThreads": 1,

"resetCriteria": 5,

"scaleFactor": 1,

"sensitivity": 10.0,

"type": 0,

"xDetectionCriteria": 10,

"yDetectionCriteria": 10

},

"objectDetector2": {

"classNames": [],

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"enable": false,

"frameBufferSize": 30,

"initString": "",

"logMode": 2,

"maxObjectHeight": 128,

"maxObjectWidth": 128,

"maxXSpeed": 30.0,

"maxYSpeed": 30.0,

"minDetectionProbability": 0.5,

"minObjectHeight": 4,

"minObjectWidth": 4,

"minXSpeed": 0.009999999776482582,

"minYSpeed": 0.009999999776482582,

"numThreads": 1,

"resetCriteria": 1,

"scaleFactor": 1,

"sensitivity": 10.0,

"type": 0,

"xDetectionCriteria": 2,

"yDetectionCriteria": 2

},

"objectDetector3": {

"classNames": [],

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"enable": true,

"frameBufferSize": 0,

"initString": "yolov7-tiny_640x640.onnx;640;640",

"logMode": 2,

"maxObjectHeight": 256,

"maxObjectWidth": 256,

"maxXSpeed": 0.0,

"maxYSpeed": 0.0,

"minDetectionProbability": 0.5,

"minObjectHeight": 4,

"minObjectWidth": 4,

"minXSpeed": 0.0,

"minYSpeed": 0.0,

"numThreads": 0,

"resetCriteria": 0,

"scaleFactor": 0,

"sensitivity": 0.0,

"type": 0,

"xDetectionCriteria": 0,

"yDetectionCriteria": 0

},

"objectDetector4": {

"classNames": [],

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"enable": false,

"frameBufferSize": 0,

"initString": "",

"logMode": 0,

"maxObjectHeight": 0,

"maxObjectWidth": 0,

"maxXSpeed": 0.0,

"maxYSpeed": 0.0,

"minDetectionProbability": 0.0,

"minObjectHeight": 0,

"minObjectWidth": 0,

"minXSpeed": 0.0,

"minYSpeed": 0.0,

"numThreads": 0,

"resetCriteria": 0,

"scaleFactor": 0,

"sensitivity": 0.0,

"type": 0,

"xDetectionCriteria": 0,

"yDetectionCriteria": 0

},

"videoFilter1": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"level": -1.0,

"mode": 0,

"type": -1

},

"videoFilter2": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"level": 1.0,

"mode": 0,

"type": 0

},

"videoFilter3": {

"custom1": 0.0,

"custom2": 0.0,

"custom3": 0.0,

"level": 0.0,

"mode": 0,

"type": 0

},

"videoFilter4": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"level": 0.0,

"mode": 0,

"type": 0

},

"videoFilter5": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"level": 62.5,

"mode": 0,

"type": -1

},

"videoSource": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"exposureMode": 0,

"focusMode": 0,

"fourcc": "BGR24",

"fps": 30.0,

"gainMode": -1,

"height": 720,

"logLevel": 0,

"roiHeight": 0,

"roiWidth": 0,

"roiX": 0,

"roiY": 0,

"source": "test.mp4",

"width": 1280

},

"videoStabiliser": {

"aFilterCoeff": 0.8999999761581421,

"aOffsetLimit": 10.0,

"constAOffset": 0.0,

"constXOffset": 0,

"constYOffset": 0,

"cutFrequencyHz": 0.0,

"enable": false,

"fps": 30.0,

"logMod": 0,

"scaleFactor": 1,

"transparentBorder": true,

"type": 2,

"xFilterCoeff": 0.8999999761581421,

"xOffsetLimit": 150,

"yFilterCoeff": 0.8999999761581421,

"yOffsetLimit": 150

},

"videoTracker": {

"custom1": -1.0,

"custom2": -1.0,

"custom3": -1.0,

"frameBufferSize": 256,

"lostModeOption": 0,

"maxFramesInLostMode": 128,

"multipleThreads": false,

"numChannels": 3,

"rectAutoPosition": false,

"rectAutoSize": false,

"rectHeight": 72,

"rectWidth": 72,

"searchWindowHeight": 256,

"searchWindowWidth": 256,

"type": -1

}

}

}

The application parameters consist only of VPipeline parameters. Table 2 gives a short description of the parameters.

Table 2 - Application parameters.

| Parameter | Description |

|---|---|

| camera | Camera controller parameters. Not used in the application. Dummy camera controller will be initialized. |

| lens | Lens controller parameters. Not used in the application. Dummy lens controller will be initialized. |

| general | General video processing pipeline parameters. |

| objectDetector1 | First object detector parameters. Motion detector parameters. Gmd object will be initialized. |

| objectDetector2 | Second object detector parameters. Video changes detector parameters. Ged object will be initialized. |

| objectDetector3 | Third object detector parameters. DNN object detector parameters. DnnOpenCvDetector object will be initialized. |

| objectDetector4 | Fourth object detector parameters. Not used in the application. Dummy object detector will be initialized. |

| videoFilter1 | First video filter parameters. Image flip module. ImageFlip object will be initialized. |

| videoFilter2 | Second video filter parameters. Digital zoom module. DigitalZoom object will be initialized. |

| videoFilter3 | Third video filter parameters. Not used in the application. Dummy video filter will be initialized. |

| videoFilter4 | Fourth video filter parameters. Defog / dehaze module. Dehazer object will be initialized. |

| videoFilter5 | Fifth video filter parameters. Motion magnification module. MotionMagnificator object will be initialized. |

| videoSource | Video source parameters. Video capture module. VSourceOpenCv object will be initialized. Note: by default the “source” field has the value “test.mp4”, which means a video file will be opened (the test file is located in the static folder). If you change the value to “file dialog”, the application will open a file dialog to choose a video. You can also open a camera by its number (“0”, “1” etc.) or open an RTSP stream (“rtsp://192.168.1.100:7000/live”). Note: with an RTSP stream the video capture latency can be high. |

| videoStabiliser | Video stabilizer parameters. VStabiliserOpenCv object will be initialized. |

| videoTracker | Video tracker parameters. CvTracker object will be initialized. |
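The “source” field of the videoSource section accepts several kinds of values (file name, “file dialog”, camera number, RTSP URL). A minimal sketch of how such a string could be classified is shown below. The enum `SourceKind` and the function `classifySource` are hypothetical names introduced for illustration; the actual application and VSourceOpenCv may interpret the string differently.

```cpp
#include <algorithm>
#include <cctype>
#include <string>

// Hypothetical classification of the "source" string from the config file.
enum class SourceKind { FileDialog, Camera, RtspStream, VideoFile };

SourceKind classifySource(const std::string& source)
{
    // Special value described in Table 2: ask the user to choose a file.
    if (source == "file dialog")
        return SourceKind::FileDialog;

    // RTSP streams are recognized here by their URL scheme prefix.
    if (source.rfind("rtsp://", 0) == 0)
        return SourceKind::RtspStream;

    // A string made only of digits ("0", "1", ...) is treated as a camera number.
    const bool allDigits = !source.empty() &&
        std::all_of(source.begin(), source.end(),
                    [](unsigned char c) { return std::isdigit(c) != 0; });
    if (allDigits)
        return SourceKind::Camera;

    // Anything else is assumed to be a path to a video file.
    return SourceKind::VideoFile;
}
```

With this sketch, `classifySource("test.mp4")` yields `SourceKind::VideoFile` and `classifySource("0")` yields `SourceKind::Camera`.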

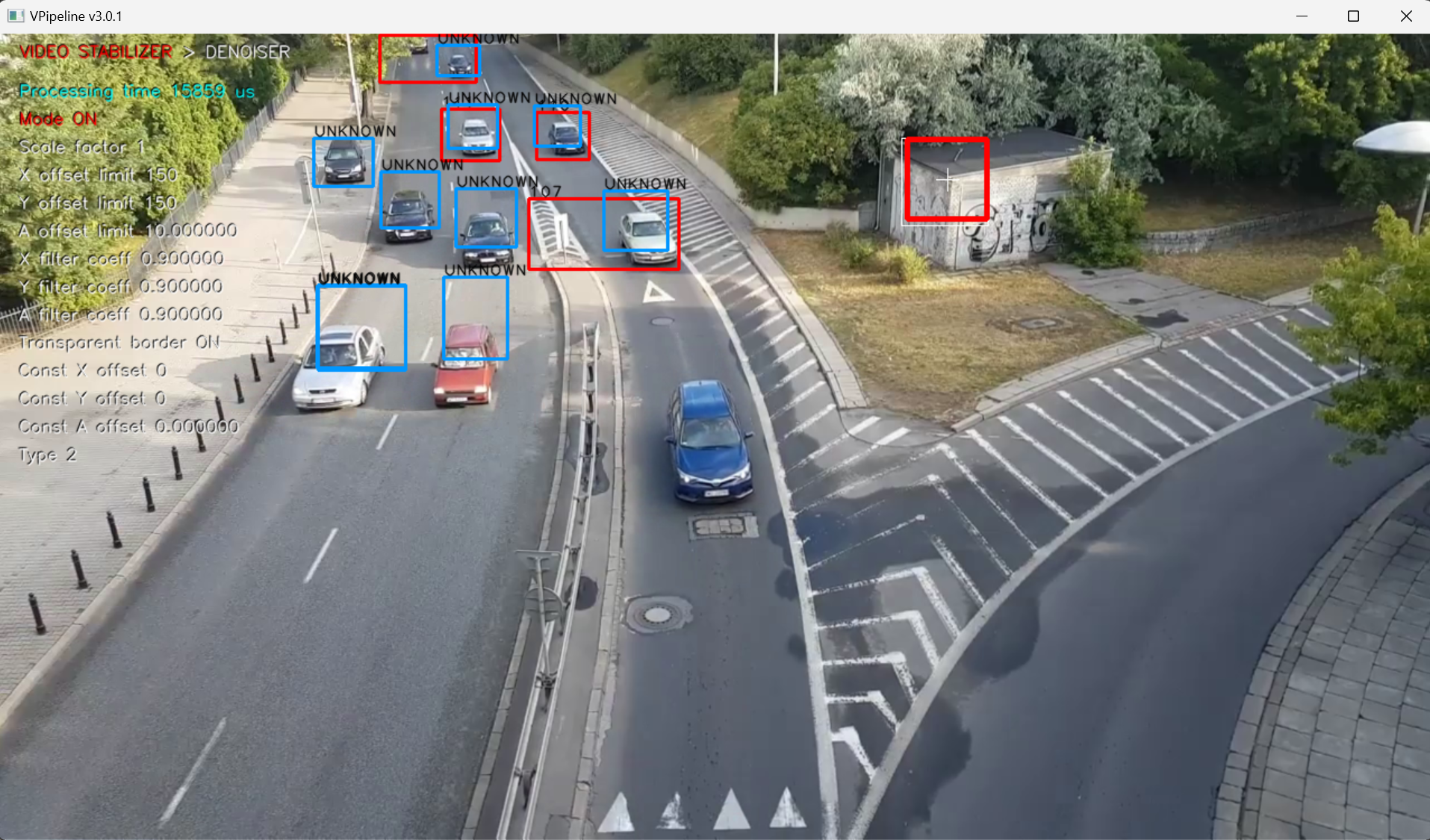

After starting the application (running the executable file), the user should select a video file in the dialog box (if the “source” field of the “videoSource” section in the config file is set to “file dialog”). After that the user will see the user interface shown in the image. The window shows the original video with detection results and the video tracker rectangle.

Control

To control the application, the main video display window must be active (in focus), and the English keyboard layout must be active with CapsLock off. The application is controlled with the keyboard and mouse.

Table 3 - Control buttons.

| Button | Description |

|---|---|

| ESC | Exit the application. If video recording is active, it will be stopped. |

| ENTER | Start/stop playback mode. |

| R | Start/stop video recording. When video recording is enabled, a file dst_[date and time].avi (result video) is created in the directory with the application executable file. Recording is performed of what is displayed to the user. To stop the recording, press the R key again. During video recording, the application shows a warning message. |

| ↑ | Arrow up. Navigate through the parameters of the selected module. The active parameter is highlighted in red. |

| ↓ | Arrow down. Navigate through the parameters of the selected module. The active parameter is highlighted in red. |

| → | Arrow right. Navigate through video processing pipeline modules. |

| ← | Arrow left. Navigate through video processing pipeline modules. |

| + | Increase value of chosen parameter of particular module. |

| - | Decrease value of chosen parameter of particular module. |

| W | Increase tracking rectangle height by 8 pixels. Can be changed in tracking mode. |

| S | Decrease tracking rectangle height by 8 pixels. Can be changed in tracking mode. |

| D | Increase tracking rectangle width by 8 pixels. Can be changed in tracking mode. |

| A | Decrease tracking rectangle width by 8 pixels. Can be changed in tracking mode. |

| T | Move the tracking rectangle UP by 4 pixels. |

| G | Move the tracking rectangle DOWN by 4 pixels. |

| F | Move the tracking rectangle LEFT by 4 pixels. |

| H | Move the tracking rectangle RIGHT by 4 pixels. |

| Q | Enable/disable tracking rectangle auto size. |

| E | Enable/disable tracking rectangle auto position. |

| Z | Adjust tracking rectangle size and position once. |

| X | Move tracking rectangle to the center. |

| 1 | Save current application params to file. They will be loaded at the next application launch. |

| Mouse left button | Capture object for tracking. |

| Mouse right button | Reset object tracking. |
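The tracking rectangle keys from Table 3 can be sketched as a small key handler. This is an illustration only: the names `TrackRect` and `applyTrackRectKey` are hypothetical, the sketch assumes image coordinates in which “UP” means a smaller y value, and it applies no size or position limits, which the real application may enforce.

```cpp
#include <cctype>

// Hypothetical tracking rectangle: top-left corner and size in pixels.
struct TrackRect
{
    int x;
    int y;
    int width;
    int height;
};

// Apply one key press from Table 3 to the rectangle.
// Returns true if the key is one of the rectangle control keys.
bool applyTrackRectKey(TrackRect& rect, char key)
{
    constexpr int sizeStep = 8; // W/S/D/A change the size by 8 pixels.
    constexpr int moveStep = 4; // T/G/F/H move the rectangle by 4 pixels.

    switch (std::tolower(static_cast<unsigned char>(key)))
    {
    case 'w': rect.height += sizeStep; return true;
    case 's': rect.height -= sizeStep; return true;
    case 'd': rect.width  += sizeStep; return true;
    case 'a': rect.width  -= sizeStep; return true;
    case 't': rect.y -= moveStep; return true; // Up (assumed: y grows downward).
    case 'g': rect.y += moveStep; return true; // Down.
    case 'f': rect.x -= moveStep; return true; // Left.
    case 'h': rect.x += moveStep; return true; // Right.
    default:  return false;                    // Not a rectangle control key.
    }
}
```

In an OpenCV user interface the key code would typically come from `cv::waitKey`, and the updated rectangle would then be passed on to the video tracker.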

Prepare compiled library files

If you want to compile and collect all libraries from the VPipelineDemo repository together with their header files on Linux, you can create a bash script in the repository root folder which will collect all necessary files in one place. To do this, compile VPipelineDemo and, after running make, follow these steps:

- Create a bash script (you need to have the nano editor installed):

cd VPipelineDemo

nano prepareCompiled

- Copy the following text into it:

#!/bin/bash

# Destination directories for the collected header files and static libraries.
# Adjust these paths if you want to collect the files somewhere else.
HEADERS_DESTINATION_DIR="./include"
LIB_DESTINATION_DIR="./lib"

# Create the headers destination directory if it doesn't exist.
if [ ! -d "$HEADERS_DESTINATION_DIR" ]; then
    mkdir -p "$HEADERS_DESTINATION_DIR"
fi

# Find and copy all .h files from the current directory tree to the destination directory.
# The "." specifies the current directory. Adjust it if you want to run the script from a different location.
find . -type f -name '*.h' -exec cp {} "$HEADERS_DESTINATION_DIR" \;

# Copy the nlohmann directory as a whole, if it is found.
found_dir=$(find . -type d -name "nlohmann" -print -quit)
if [ -n "$found_dir" ]; then
    cp -r "$found_dir" "$HEADERS_DESTINATION_DIR"
    echo "Directory nlohmann has been copied to $HEADERS_DESTINATION_DIR."
fi

# Create the libraries destination directory if it doesn't exist.
if [ ! -d "$LIB_DESTINATION_DIR" ]; then
    mkdir -p "$LIB_DESTINATION_DIR"
fi

# Find and copy all .a files from the current directory tree to the destination directory.
# The "." specifies the current directory. Adjust it if you want to run the script from a different location.
find . -type f -name '*.a' -exec cp {} "$LIB_DESTINATION_DIR" \;

- Save (“Ctrl + S”) and close (“Ctrl + X”).

- Make the file executable and run it:

sudo chmod +x prepareCompiled

./prepareCompiled